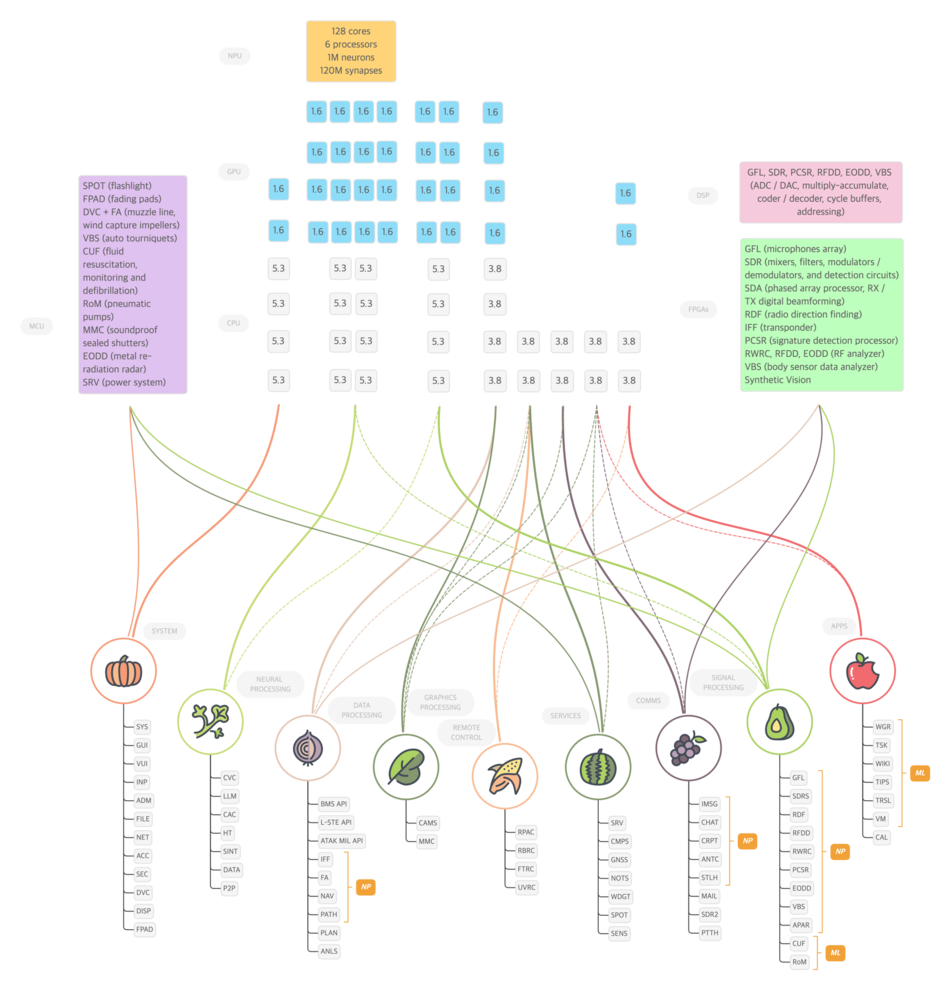

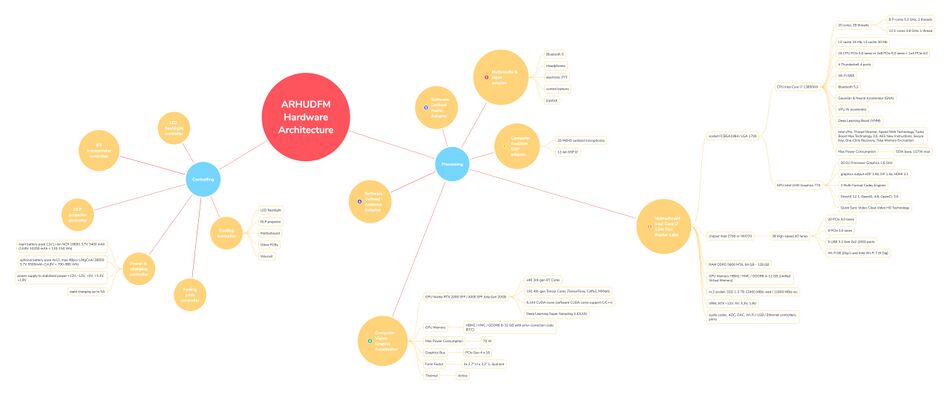

Public:Applications

Abstract

Further information: Public:ARHUDFM Manifesto, Public:Graphical User Interface, Public:ARHUDFM Features Summary, Public:DoD_Pains

Augmented Reality Head-Up Display Fullface Mask (ARHUDFM) is a complex device that is not easy to understand at first glance, because there are few comparable devices. We therefore suggest that you first read the Public:ARHUDFM Manifesto page.

This page describes the structure and contents of the applications used in the ARHUDFM device.

App names in alphabetic order

Further information: Public:Graphical User Interface, Public:Voice User Interface

The applications are listed here in alphabetical order of their abbreviated names.

| A | B | C | D | E | F | G | H | I | J | K | L | M |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ACC | BSVE | CAC | DISP | EODD | FA | 4/5G | HF | IFF |  |  |  | MAP |
| ADM | BT | CAL | DRON |  | FILE | GFL | HT | IMSG |  |  |  | MMD |
| ANLS |  | CAM | DVC |  | FPAD | GNSS |  | INP |  |  |  | MMC |
| ANTC |  | CHAT |  |  | FTRC |  |  |  |  |  |  | MSG |
| APAR |  | CRPT |  |  |  |  |  |  |  |  |  |  |
|  |  | CVC |  |  |  |  |  |  |  |  |  |  |

| N | O | P | Q | R | S | T | U | V | W | X | Y | Z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NAV |  | P2P |  | RADR | SDA | TESS | UHF | VBS | WGR |  |  |  |
| NET |  | PATH |  | RBRC | SDR2 | TIPS | UVRC | VHF | WIKI |  |  |  |
| NOTS |  | PCSR |  | RC | SDRS | TIME |  | VM | WLAN |  |  |  |
|  |  | PLAN |  | RDF | SEC | TRSL |  | VOVR | WSVE |  |  |  |
|  |  | PLAY |  | REC | SENS | TSK |  |  |  |  |  |  |
|  |  | PROC |  | RFDD | SGHT |  |  |  |  |  |  |  |
|  |  | PTTH |  | RPAC | SINT |  |  |  |  |  |  |  |
|  |  |  |  | RWRC | SPOT |  |  |  |  |  |  |  |
|  |  |  |  |  | SRV |  |  |  |  |  |  |  |
|  |  |  |  |  | STM |  |  |  |  |  |  |  |
|  |  |  |  |  | STT |  |  |  |  |  |  |  |
|  |  |  |  |  | SYS |  |  |  |  |  |  |  |

Application groups

Here are the groups of applications.

Further information: Public:Graphical User Interface, Public:Voice User Interface

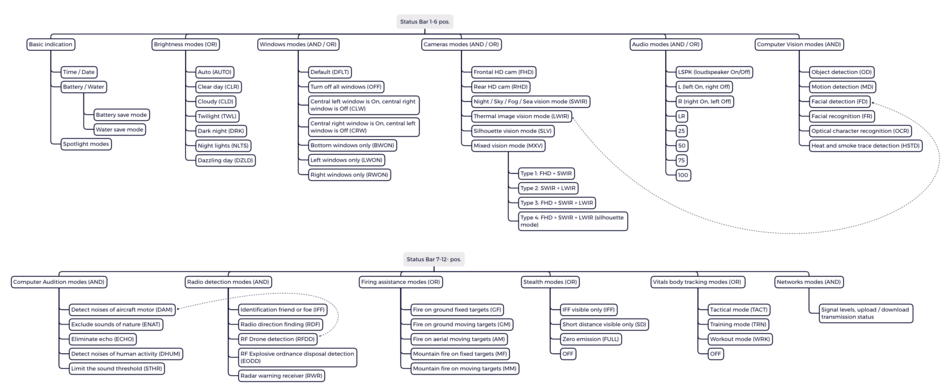

This shows where the above applications are located in the status menu structure with a path to the app.

Status Bar

Main Menu

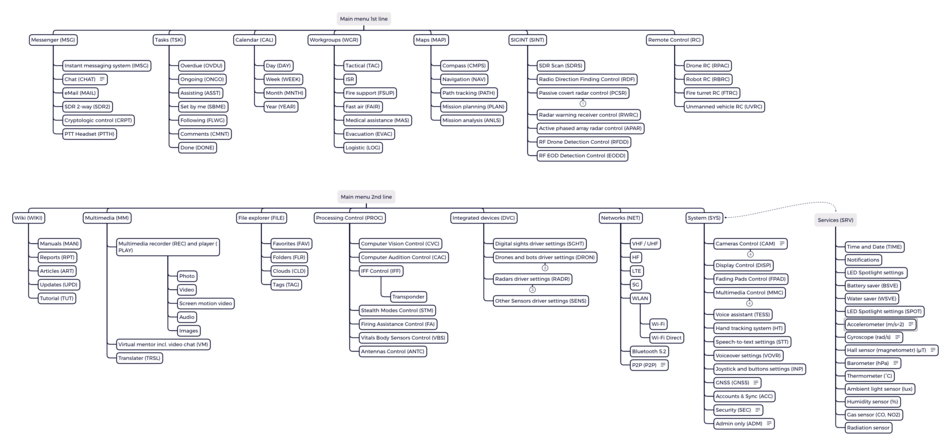

This shows where the above applications are located in the main menu structure with a short note.

For a brief description of each application's functions, see "SB Presets" and "Left main menu (LMM) and Right submenu (RSB)". A fuller explanation is given in the sections that follow the table. A detailed description of each application's functionality is on the page of the same name; see the links in the sections below and at the bottom of each page of the project.

| Indication | Left Main menu item name | Indication | Right Submenu Apps or Filters item name | Notice |

|---|---|---|---|---|

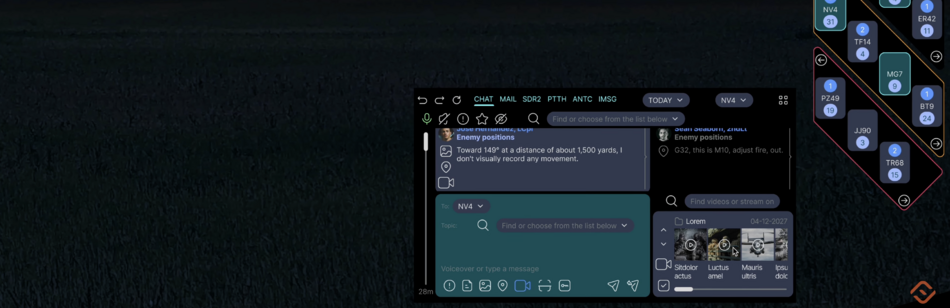

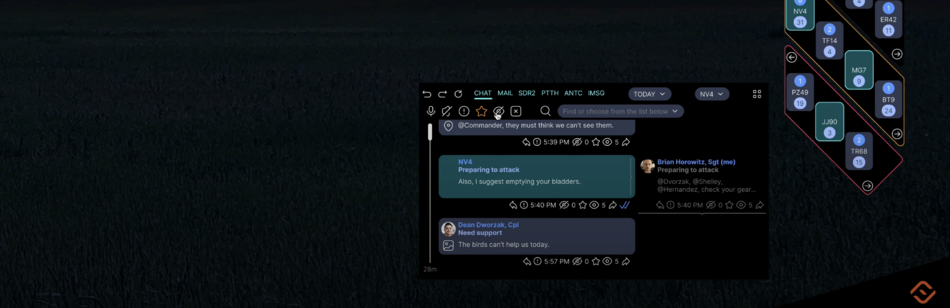

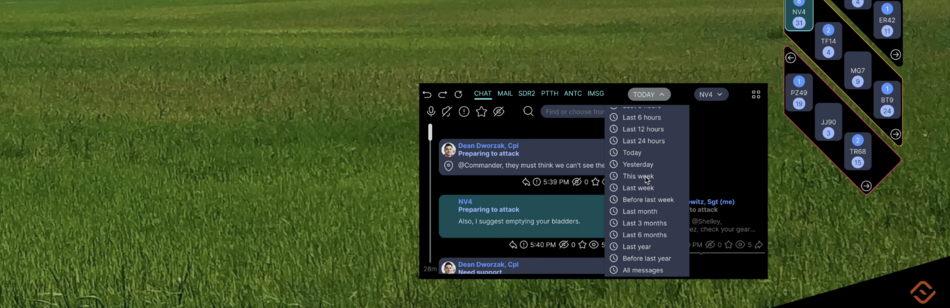

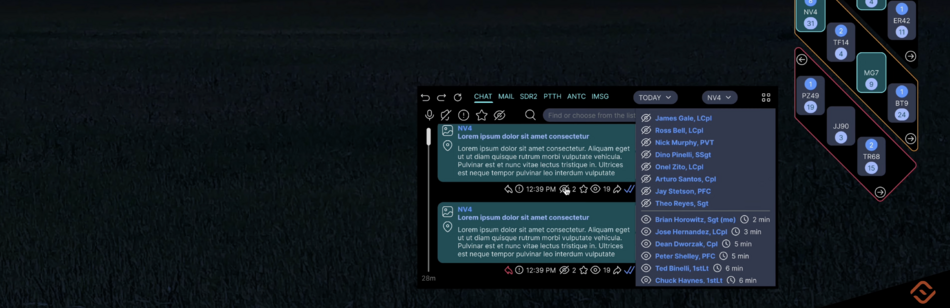

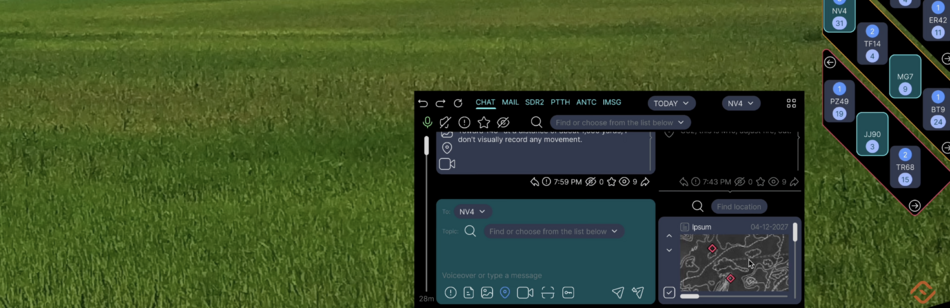

| MSG | Messenger | IMSG | Instant messaging system | Large characters appear on the screen and are read by other users instantly. Messages are generated automatically based on the sender's voice commands and gestures. Special operations forces are familiar with this system. We have improved it. |

| CHAT | Chat | Uses Speech-to-text, Voiceover services, TESS voice assistant, so text input and reading is possible but not required | ||

| eMail client | ||||

| SDR2 | SDR 2-way | This is a built-in software-defined radio for communications, which allows you to use much more features (frequencies, modulations, encryption) than the radios used | ||

| CRPT | Cryptologic control | |||

| PTTH | PTT Headset | A software headset that allows you to use a portable handheld radio without using your hands or with buttons on the ear cup of the left headphone earpiece | ||

| TSK | Tasks | OVRD | Overdue | |

| ONGO | Ongoing | |||

| ASST | Assisting | |||

| SBME | Set by me | |||

| FLLW | Following | |||

| CMNT | Comments | |||

| DONE | Done | |||

| CAL | Calendar | DAY | Day | |

| WEEK | Week | |||

| MNTH | Month | |||

| YEAR | Year | |||

| WGR | Workgroups | TACT | Tactical | |

| ISR | ISR | |||

| FIRE | Fire support | |||

| FAIR | Fast air | |||

| MED | Medical assist | |||

| EVAC | Evacuation | |||

| LOG | Logistic | |||

| MAP | Map and Navigation | NAV | Navigation | |

| PATH | Path tracking | |||

| PLAN | Mission planning | |||

| ANLS | Mission analyzing | |||

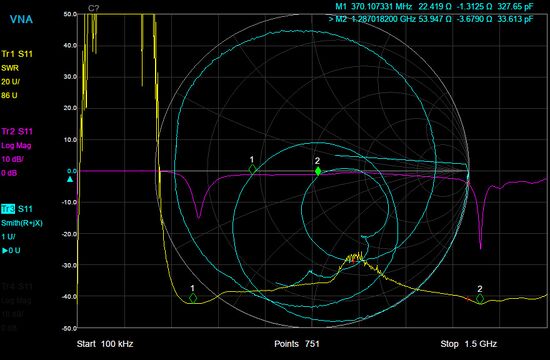

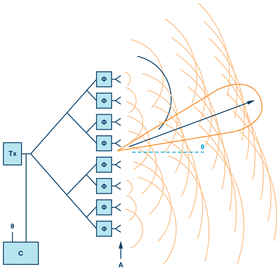

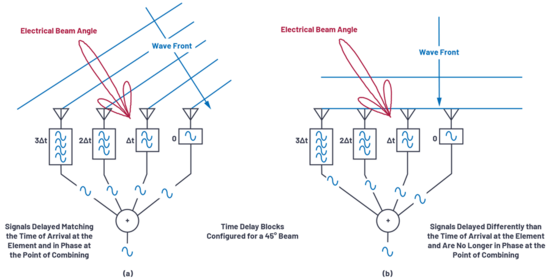

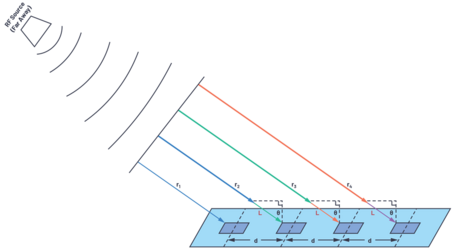

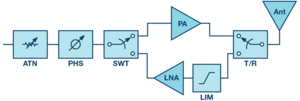

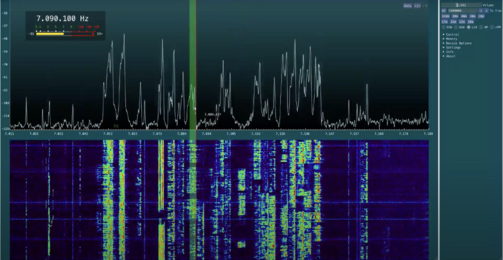

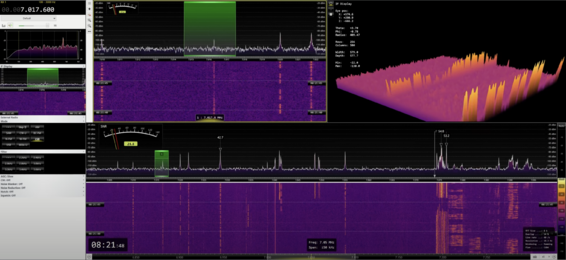

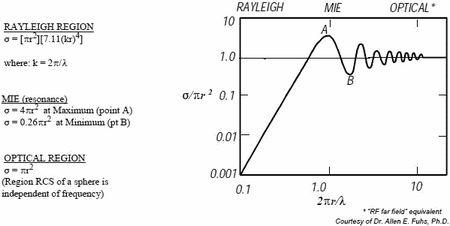

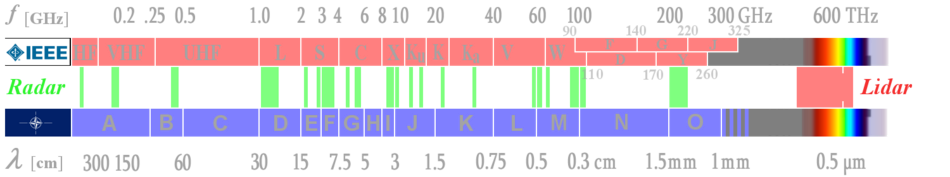

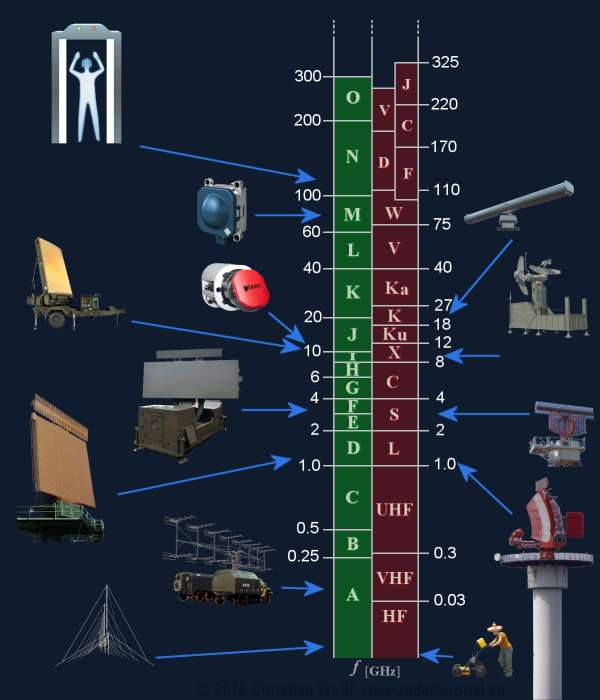

| SINT | Signal Intelligence | SDRS | SDR Scan | 2 kHz - 6 GHz, essential component for IFF, RPAC apps |

| RDF | Radio direction finding control | Generic SIGINT element and geospatial positioning | ||

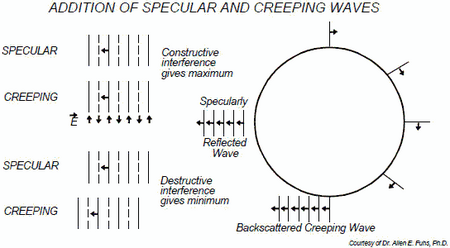

| PCSR | Passive covert radar control | Active electronically scanned array (AESA) receive module only | ||

| RWRC | Radar warning receiver control | |||

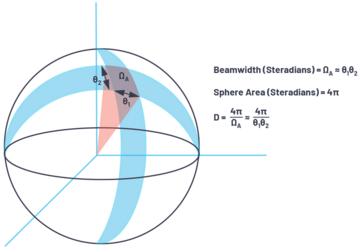

| APAR | Active phased array radar control | |||

| RFDD | RF Drone detection control | |||

| EODD | RF EOD detection control | |||

| RC | Remote Control | RPAC | Drone RC | |

| RBRC | Robot RC | |||

| FTRC | Fire turret RC | |||

| UVRC | Unmanned vehicle RC | |||

| WIKI | Wiki | MAN | Manuals | |

| RPT | Reports | |||

| ART | Articles | |||

| UPD | Updates | |||

| TUT | Tutorial | |||

| MMD | Multimedia | REC | Multimedia recorder | Photo, video, screen motion, screenshot |

| PLAY | Multimedia player | Photo, video, screen motion, screenshot, images | ||

| VM | Virtual mentor | incl. video chat | ||

| TRSL | Translater | Translation in both directions | ||

| FILE | File explorer | FAV | Favourites | |

| FLR | Folders | |||

| CLD | Clouds | |||

| TAG | Tags | |||

| PROC | Processing control | CVC | Computer Vision control | |

| CAC | Computer Audiion control | |||

| IFF | IFF control | incl. transponder settings | ||

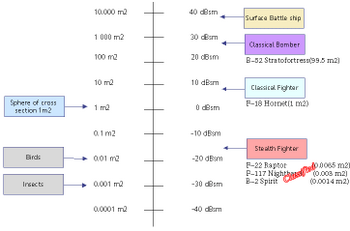

| STM | Stealth modes control | |||

| FA | Firing Assistance control | |||

| VBS | Vitals Body sensors control | |||

| ANTC | Antennas control | |||

| DVC | Integrated devices | SGHT | Digital sights driver settings | |

| DRON | Drones and bots driver settings | RPA / UAV, Fire turret, Dog bots | ||

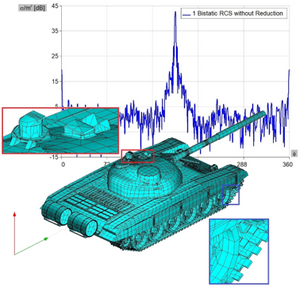

| RADR | Radars driver settings | Anti-UAV radar, AESA transmit-receive module - TRM (remote control) small tactical radar, Metal re-radiation radar (transmit-receive module) | ||

| SENS | Other Sensors driver settings | |||

| NET | Networks | VHF | VHF | |

| UHF | UHF | |||

| HF | HF | |||

| 4/5G | LTE and 5G | |||

| WLAN | WLAN | Wi-Fi, Wi-Fi Direct | ||

| BT | Bluetooth 5.2 | |||

| P2P | P2P | Multichannel P2P network, user view exchange, multi-party computing | ||

| SYS | System | CAM | Cameras control | Zoom, filters, modes, mixed, calibration, stereo and external cam modes |

| DISP | Display control | |||

| FPAD | Fading Pads control | |||

| MMC | Multimedia control | Headphones, microphones, handheld radio, loudspeaker | ||

| TESS | Voice assistant | |||

| HT | Hand tracking system | |||

| STT | Speech-to-text settings | |||

| VOVR | Voiceover settings | |||

| INP | Joystick and buttons settings | |||

| GNSS | GNSS | GPS, Galileo, QZSS | ||

| ACC | Accounts & Sync | |||

| SEC | Security | Password and security, emergency alert, SOS settings | ||

| ADM | Admin only | OS, roles, remote administration, clearance, data protect, logs, system performance, etc. | ||

| SRV | Services | Time and Date, Notifications, User tips, LED Spotlight settings, Battery saver, Water saver, Accelerometer, Gyroscope, Hall sensor, Barometer, Thermometer, Ambient light sensor, Humidity sensor, Gas sensor (CO, NO2), Radiation sensor |

Brief overview of augmented visual perception apps

Here we briefly describe the functionality of the apps. A more detailed description of each application is on its main article page; see the links below.

CAM (Cameras control)

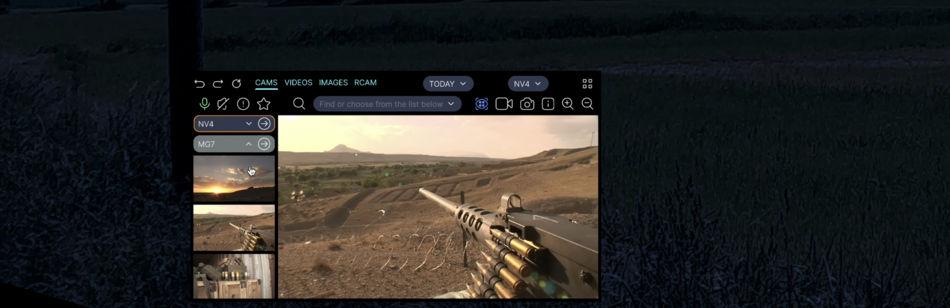

Main Article: Cameras Control, Night Vision, Computer Vision Control

Features

Goal 1. See beyond human vision

- digital zoom up to 24x with interpolation

- HD, HDR (high dynamic range), SWIR (short-wave infrared) and LWIR (long-wave infrared) imaging, day and night, in any weather

- dynamic transformation (software filters to improve visibility)

- mixed vision (edge enhancement, contrast color enhancement)

- rear view camera

- sight camera allows you to have a viewpoint from behind cover

- drone or robot camera allows you to see from another viewpoint (aerial view, robot view, another user view)

Goal 2. Use visual content

- stereoscopic vision makes it possible to measure the distance, size and speed of objects by triangulation (approach speed for objects moving toward the user, angular speed along the horizon) without emitting a laser beam, which would give away the user's position

- built-in cameras capture images of the hands for the gesture control system (Hand tracking)

- built-in and external cameras capture images for the Computer Vision system (detection, recognition)

- images can be recorded at different quality, bit rate[1] (compression ratio) and frame rate, to share with others or use later
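The stereo measurements listed above follow the standard pinhole-camera triangulation relations for a rectified stereo pair: distance Z = f·B/d (focal length in pixels times baseline over disparity), object size W = w·Z/f, and approach speed from the change in Z over time. The sketch below is illustrative only; the function names, baseline, focal length and disparity values are assumptions, since the document does not give the camera geometry.

```python
import math


def stereo_distance_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def object_size_m(size_px: float, distance_m: float, focal_px: float) -> float:
    """Real-world extent of an object spanning size_px pixels at distance Z: W = w * Z / f."""
    return size_px * distance_m / focal_px


def approach_speed_mps(z1_m: float, z2_m: float, dt_s: float) -> float:
    """Closing speed from two range measurements dt_s seconds apart (positive = approaching)."""
    return (z1_m - z2_m) / dt_s


# Hypothetical geometry: 0.1 m baseline, 2000 px focal length.
z1 = stereo_distance_m(2000, 0.1, 2.0)   # first frame
z2 = stereo_distance_m(2000, 0.1, 2.5)   # one second later
speed = approach_speed_mps(z1, z2, 1.0)
width = object_size_m(34, z1, 2000)      # object 34 px wide in the first frame
print(f"range {z1:.0f} m -> {z2:.0f} m, closing at {speed:.0f} m/s, width {width:.1f} m")
```

Because distance is inversely proportional to disparity, a one-pixel disparity error costs far more accuracy at long range than at short range, which is why a wide baseline and sub-pixel matching matter for passive ranging.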

The application starts in startup mode.

Advantages

- digital cameras and their optics are more compact, lighter and less prone to damage than conventional optics

- digital cameras are multifunctional and cheaper than conventional optics (more features for the same money)

- SWIR mode, in addition to night vision, is suitable for daytime use: vision through fog, observation of the sky, objects on the water surface, visibility of people in camouflage and camouflaged objects, booby traps, plastic and metal mines, technical inspection of equipment and objects

- LWIR mode, in addition to night vision, is suitable for daytime observation of the battlefield (hot barrels, footprints, massive camouflaged metal objects, people in camouflage, especially exposed skin) at ambient temperatures below 85°F, especially when sunlight conditions create temperature contrasts

Hardware

- Images are captured by a three-sensor front stereo camera: one lens passes the image through splitting prisms to the 3 sensors.

- (a) DSLR 64 MP Hawk-eye, diagonal 9.25 mm (7.4 x 5.55 mm), type 1/1.7" (9,152 x 6,944 px, 1.32:1, x16 zoom, manual / autofocus) IR-cut filter removable, view angle 84° or 12K sensor 80MP (12,288 x 9,310, 1.32:1, x24 zoom, adaptive adjacent pixel interpolation algorithms based on pixel-by-pixel analysis - sharp edges, smooth structure, fine details)[2]

- (b) SenSWIR SONY IMX992, 400-1700 nm, InGaAs (indium gallium arsenide) layer for photoelectric conversion, approx. 5.32 megapixels, type 1/1.4 (11.4 mm diagonal), 3.45 μm, 2134 ppi, QSXGA (2560 x 2048 pixels), 130 fps (ADC 8-bit), 120 fps (ADC 10-bit), 70 fps (ADC 12-bit), Sensitivity TBD[3][4][5][6][7]; also used as the only rear camera sensor

- Camera in LWIR range (thermal image vision)

- Rear view camera in extended visible light range and SWIR

- If necessary, additional sensors can be installed on the NVG mount

Interface examples

Insert picture for example

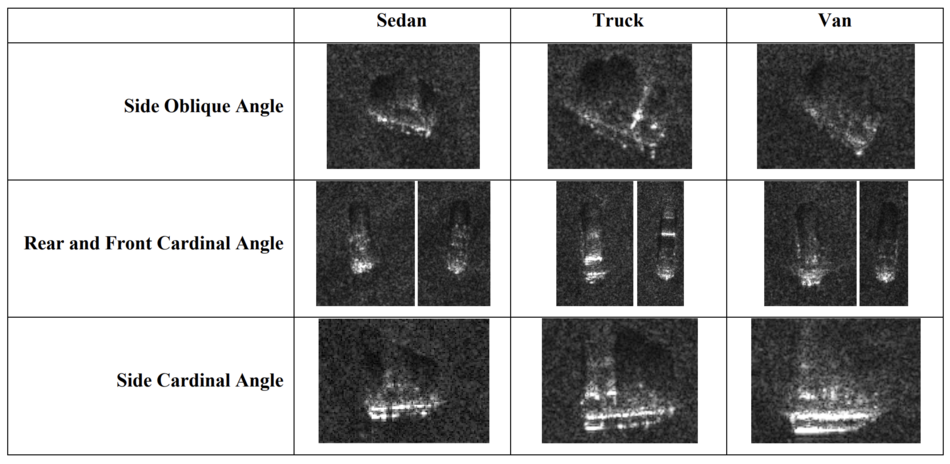

CVC (Computer Vision Control)

Main Article: Computer Vision Control, Night Vision

Features

The dataset of known graphic patterns, developed with deep learning (AI), is downloaded from the cloud to the client's local SSD during each update. During operation, the built-in detection and recognition system, based on machine learning (AI), analyzes images (possibly at 4-12 frames per second) to identify known patterns. This requires significantly less computing power and time than training.[8] At the same time, each client (device) saves samples of its results and sends them to the server to replenish the dataset and improve the algorithms. The CPU and GPU are configured so that part of their processing power is reserved exclusively for individual tasks processed in parallel threads.

Although high image quality is not required for accurate computer vision, and some algorithms already take neighboring pixels into account during digital zooming and interpolation, we intend to let detection and recognition use more information than strictly required. The same object on the client device will therefore be captured by 3 sensors in different light ranges, with several frame sequences from each sensor. In total, up to 20-30 images of one object taken at the same time can be analyzed against each other, and the resulting patterns are then analyzed again against the dataset.
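One simple way to combine the per-image results for a single object is naive log-odds fusion, which treats each image's detection confidence as independent evidence and sums it on the log-odds scale. This is an illustrative choice, not the method the project specifies; the function names, the 0.5 prior and the sample scores are assumptions.

```python
import math


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def fuse_confidences(scores, prior=0.5, eps=1e-6):
    """Combine per-image detection confidences for one object by summing log-odds.

    Assumes every score is a posterior produced with the same prior and that the
    images are conditionally independent (a simplification, see the note below).
    """
    prior_lo = logit(prior)
    total = prior_lo
    for p in scores:
        p = min(max(p, eps), 1.0 - eps)  # keep log-odds finite for scores of 0 or 1
        total += logit(p) - prior_lo     # evidence contributed by this image
    return 1.0 / (1.0 + math.exp(-total))


# Hypothetical confidences for one object from visible, SWIR and LWIR frames.
print(round(fuse_confidences([0.7, 0.8, 0.6, 0.75]), 3))
```

Frames from the same sensor are strongly correlated, so treating them as independent overstates certainty; down-weighting each frame's contribution or a learned fusion model would be more realistic.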

For the most complex cases, such as face recognition in a large flow of people, the shared (distributed) computing resources of the P2P (peer-to-peer) network can be used. Data is then processed in parallel threads on different devices, and the results are available to everyone equally or according to the settings.

One of the advantages of the ARHUDFM technology is the ability to use other data for clarification. For example, the same object may be detected from a different viewpoint by another device user or by an external camera (drone, robot, sight), and this information, given the distance, the angle of light on the object and the viewpoint, may contain more data. Data exchange within the P2P network lets several devices combine their efforts for identification. Another capability is to run Computer Audition in parallel for identification by sound patterns. A third capability is radio wave detection of inanimate objects (SDRS, RDF, passive radar, metal re-radiation radar). The synergy of multiple detection technologies running in parallel threads and integrated with each other offers exciting potential.

The application starts when one of the presets is enabled. It does not start by default.

Presets:

- OD (Object detection), based on a trained neural network (AI), detects people, equipment, drones and artificial structures from different angles and at different distances, highlights them on the screen, measures range, azimuth, elevation, height above the ground, speed, course direction and geoposition, and displays them on the navigation reticle

- Object Detection will be able to detect anti-tank and anti-personnel mines (including plastic mines), trip wires, pendants, IEDs on the ground and drones above with high accuracy

- MD (Motion detection) helps to detect moving objects, people and equipment, ignoring the flights of birds and insects, the movement of clouds. MD mode is recommended to be used in conjunction with Radio Frequency Drone Detection (RFDD) and Computer Audition Detect noises of aircraft motor (DAM) mode

- FD (Facial detection), which also uses the LWIR camera sensor and a trained neural network, helps detect people's faces

- FR (Facial recognition) uses a neural network in communication with the server to recognize people's faces for identification (part of the dataset can be stored on the local drive)

- OCR (Optical character recognition) recognizes text and other graphic characters for subsequent translation and documentation

- HSTD (Heat and smoke trace detection) helps detect aircraft and missile paths, artillery and mortar fire from closed positions
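The range, azimuth, elevation, height and geoposition values that OD reports are related by simple geometry once the system has a slant range (for example from stereo triangulation), a bearing and the user's own GNSS fix. The sketch below uses a local flat-Earth approximation, which is only reasonable over short ranges of a few kilometers; the function name, the observer eye-height parameter and the level-terrain assumption are illustrative, not taken from the project.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def locate_target(lat_deg, lon_deg, observer_height_m,
                  slant_range_m, azimuth_deg, elevation_deg):
    """Estimate target latitude, longitude and height above ground.

    Assumes level terrain and a flat-Earth local approximation (short ranges only).
    Azimuth is measured clockwise from true north; elevation is above the horizon.
    """
    el = math.radians(elevation_deg)
    az = math.radians(azimuth_deg)
    horizontal_m = slant_range_m * math.cos(el)
    height_m = observer_height_m + slant_range_m * math.sin(el)
    north_m = horizontal_m * math.cos(az)
    east_m = horizontal_m * math.sin(az)
    lat = lat_deg + math.degrees(north_m / EARTH_RADIUS_M)
    lon = lon_deg + math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat, lon, height_m


# Hypothetical drone: 200 m away, due east, 30 degrees above the horizon.
print(locate_target(50.0, 10.0, 1.7, 200.0, 90.0, 30.0))
```

For longer ranges, a proper geodesic calculation on the WGS 84 ellipsoid would replace the flat-Earth step.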

Interface examples

Insert picture for example

DISP (Display control)

Main Article: Display Control

Features

The application starts in startup mode.

The application controls the modes:

- Window modes

- Brightness modes

Interface examples

Insert picture for example

FPAD (Fading Pads control)

Main Article: Fading Pads Control

Features

The application starts in startup mode. It controls the transparency of the fading pads. The interface contains 58 fading pads which, depending on the ambient light or the mode selected by the user, instantly change their transparency anywhere from 0% (opaque black) to 100% (fully transparent). This is necessary to comply with the interface principles.

When the contrast is sufficient to display most applications in windows, the pads should stay transparent, because this keeps the user more aware of the environment and better able to respond to movement in the field of vision.

Fading windows with varying levels of shading can act as screens against dazzling sunlight. The outer visor surface is magnetron-coated, which filters UV from sunlight, so with such screens the user does not need sunglasses inside the mask. Users can wear prescription eyeglasses without restriction, since the geometry of the design accommodates them. How the device deals with glasses and visor fogging is described in the Features Summary.
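The document does not specify how pad transparency follows ambient light. As a hypothetical illustration, the mapping could keep the pads fully clear in dim light and shade them progressively in bright light, interpolating on a logarithmic illuminance scale because daylight spans several orders of magnitude. The function name and all thresholds below are assumptions, and a user-selected mode overrides the automatic result.

```python
import math
from typing import Optional


def pad_transparency_pct(ambient_lux: float,
                         low_lux: float = 1_000.0,
                         high_lux: float = 100_000.0,
                         min_pct: float = 20.0,
                         user_override_pct: Optional[float] = None) -> float:
    """Return fading-pad transparency in percent (0 = opaque black, 100 = fully clear).

    All threshold defaults are hypothetical placeholders, not device specifications.
    """
    if user_override_pct is not None:
        # A mode chosen by the user takes precedence over the automatic curve.
        return min(max(user_override_pct, 0.0), 100.0)
    if ambient_lux <= low_lux:
        return 100.0      # dim light: keep the view of the surroundings clear
    if ambient_lux >= high_lux:
        return min_pct    # direct sunlight: maximum automatic shading
    # Interpolate on log10(lux) between the two thresholds.
    t = (math.log10(ambient_lux) - math.log10(low_lux)) / (
        math.log10(high_lux) - math.log10(low_lux))
    return 100.0 - t * (100.0 - min_pct)


print(pad_transparency_pct(10_000.0))
```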

As a rule, windows can take several layouts, depending on how much they need to be enlarged:

- 1:1 - a window at its standard minimum size

- 1:2 - a window merging two horizontally adjacent standard windows

- 2:1 - a window merging two vertically adjacent standard windows

- large - a window merging four standard windows on the left or right

Interface examples

Insert picture for example

HT (Hand tracking system)

Main Article: Hand Tracking

Features

The application starts in startup mode. It lets the user control the interface with gestures.

- The stereo camera captures images of the hands and recognizes the user's gestures

- The gesture library is constantly updated, and machine learning lets the user create custom gestures

- Gestures can convey not only a state but also dynamics, for example smoothly changing the volume level, brightness, etc.

- Finger capture and recognition make it possible to use software interface controls, including scrollbars and buttons (and a virtual keyboard if necessary), to swipe sideways and up and down, to scale and rotate screens inside a window, and to hide and move windows
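Smoothly changing a value with a gesture, as described above, needs two steps: map a tracked hand measurement to the control range, then filter out frame-to-frame tracking jitter. The sketch assumes a hypothetical thumb-to-index distance in millimeters as the input and uses an exponential moving average; the class name, ranges and smoothing factor are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class ContinuousGestureControl:
    """Turn a tracked hand measurement into a smoothed 0-100 level (e.g. volume)."""
    min_mm: float = 10.0    # fingers pinched: level 0 (assumed)
    max_mm: float = 120.0   # fingers spread: level 100 (assumed)
    alpha: float = 0.3      # 0 < alpha <= 1; higher reacts faster, lower is steadier
    level: float = 50.0     # current output level

    def update(self, distance_mm: float) -> float:
        clamped = min(max(distance_mm, self.min_mm), self.max_mm)
        target = 100.0 * (clamped - self.min_mm) / (self.max_mm - self.min_mm)
        # Exponential moving average: move a fraction alpha toward the new target.
        self.level += self.alpha * (target - self.level)
        return self.level


volume = ContinuousGestureControl()
for d in (60.0, 80.0, 100.0, 120.0):  # hand opening over four frames
    volume.update(d)
print(round(volume.level, 1))
```

A higher `alpha` makes the control feel more responsive at the cost of passing more tracking noise through to the setting.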

Interface examples

Insert picture for example

Brief overview of mixed audial perception apps

Here we briefly describe the functionality of the apps. A more detailed description of each application is on its main article page; see the links below.

MMC (Multimedia control)

Main Article: Multimedia Control

Features

The application starts in startup mode. It lets the user configure presets and work with applications for the multimedia devices: headphones, embedded microphones, a handheld radio (through the built-in hardware headset and the voice software headset) and the loudspeaker.

Two insulating layers, the airway obturator and the seal around the face make the user's voice almost inaudible from outside. In the other direction, active noise reduction dampens unwanted sound pressure. We expect this technology to reach a reduction of ∆80 dB, bringing levels down to 15-85 dB SPL (85 dB SPL being the upper limit of normal). However, this unit is of the Audial Augmentation type, so the openings in the bottom of the headphone ear cups are normally open, letting the user perceive the surrounding sound environment with minimal attenuation (less than 10 dB SPL). When the ambient sound pressure exceeds threshold values, these openings are closed by sealed soundproof shutters. The electromagnetic actuators that drive the shutters respond in less than 3 ms (0.003 s).
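The shutter behavior described above (open by default, closed when ambient sound pressure exceeds a threshold) is naturally expressed as a two-threshold (hysteresis) switch, which keeps the shutters from chattering when the level hovers around a single threshold. The class name and both threshold values are assumptions; the document does not state them.

```python
class EarCupShutter:
    """Hysteresis controller for the soundproof ear-cup shutters (thresholds assumed)."""

    def __init__(self, close_above_db: float = 85.0, reopen_below_db: float = 80.0):
        if reopen_below_db >= close_above_db:
            raise ValueError("reopen threshold must be below the close threshold")
        self.close_above_db = close_above_db
        self.reopen_below_db = reopen_below_db
        self.closed = False  # openings are normally open

    def update(self, ambient_spl_db: float) -> bool:
        """Feed the latest ambient SPL reading; return True while the shutters are closed."""
        if not self.closed and ambient_spl_db > self.close_above_db:
            self.closed = True    # loud event: seal the ear cups
        elif self.closed and ambient_spl_db < self.reopen_below_db:
            self.closed = False   # quiet again: restore ambient hearing
        return self.closed


shutter = EarCupShutter()
print([shutter.update(spl) for spl in (70.0, 120.0, 83.0, 75.0)])
```

Readings between the two thresholds leave the shutters in whatever state they are already in, which is what prevents rapid open-close cycling.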

Hardware

- Built-in MEMS (Micro-Electro-Mechanical Systems) microphone (1) in the mask obturator airway transmits the user's voice

- If the lower part of the mask is not used, a boom microphone (2) with a windproof pad on a flexible gooseneck is connected

- 19 cardioid MEMS (dome) microphones perceive sound waves over a wide frequency range, including very low and very high frequencies inaudible to humans; they are used in the Computer Audition modes, incl. active noise reduction

- Supercardioid and ultra-cardioid microphones with a narrow directivity pattern on the front of the mask accurately determine the direction of the sound source

- A loudspeaker with amplification of up to ∆50 dB SPL means the user does not have to shout: people nearby will hear the voice at a distance of several tens of meters, even in high ambient noise

- Full-size headphones have large ear cups and ear cushions made of hypoallergenic elastic polyurethane that press against the head ("circum-aural", "around the ear").

- With prolonged use of any headphones, especially indoors and in the heat, the ears usually sweat. In this design, the openings in the bottom of the headphone ear cups are normally open and provide ventilation.

- In places where ear cushions adjoin the scalp, it is recommended to apply a dry antiperspirant cream on the surface of the ear cushions.
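The loudspeaker claim above (up to ∆50 dB of amplification, audible at several tens of meters in noise) can be sanity-checked with the free-field inverse-square law, under which sound pressure level falls by 20·log10(r/r0) dB with distance. The sketch assumes normal speech of about 60 dB SPL at 1 m, reads "∆50 dB" as 50 dB added to that level, and ignores air absorption, reflections and directivity; all three are simplifying assumptions, as are the function names.

```python
import math


def spl_at_distance_db(spl_ref_db: float, distance_m: float, ref_distance_m: float = 1.0) -> float:
    """Free-field point source: the level falls by 20*log10(r/r0) dB (about 6 dB per doubling)."""
    return spl_ref_db - 20.0 * math.log10(distance_m / ref_distance_m)


def audible_range_m(spl_ref_db: float, ambient_db: float, margin_db: float = 10.0,
                    ref_distance_m: float = 1.0) -> float:
    """Distance at which the voice is still margin_db above the ambient noise level."""
    return ref_distance_m * 10 ** ((spl_ref_db - ambient_db - margin_db) / 20.0)


speech_at_1m_db = 60.0                 # assumed unamplified speech level at 1 m
amplified_db = speech_at_1m_db + 50.0  # assumed reading of "up to ∆50 dB"
noisy_ambient_db = 70.0                # assumed loud background noise
print(f"{audible_range_m(amplified_db, noisy_ambient_db):.0f} m")  # prints "32 m"
```

Under these assumptions the voice stays 10 dB above a 70 dB background out to about 32 m, which is consistent with "several tens of meters".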

Interface examples

Insert picture for example

TESS (Voice assistant)

Main Article: Voice Assistant

Features

The application starts in startup mode. It is a small language model, based on AI methods, that can learn from the data of a particular user and of the many users of this platform.

Users pronounce commands differently and with different accents, and nobody will memorize dozens of fixed voice commands, so a rigid command set is not effective. A voice assistant is used instead.

Interface examples

Insert picture for example

STT (Speech-to-text)

Main Article: Speech-to-text

Features

The application starts in startup mode. It converts the user's speech into text using AI methods.

Voice communication converted to text takes up much less bandwidth during transmission, is free of audio distortion, and allows more complex encryption to be applied to transmitted messages.
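To make the bandwidth argument concrete, the sketch compares a short spoken report sent through a low-rate voice codec with the same report sent as UTF-8 text. The 2.4 kbit/s codec rate, the 3-second duration, the sample message and the function names are assumptions chosen for illustration.

```python
def voice_payload_bytes(duration_s: float, codec_bps: int = 2400) -> float:
    """Bytes needed to send duration_s seconds of speech at a constant codec bit rate."""
    return duration_s * codec_bps / 8


def text_payload_bytes(message: str) -> int:
    """Bytes needed to send the transcribed message as UTF-8 (before any compression)."""
    return len(message.encode("utf-8"))


report = "Contact front, 200 m, two vehicles"  # hypothetical transcribed report
voice = voice_payload_bytes(3.0)              # about 3 s of speech
text = text_payload_bytes(report)
print(f"voice {voice:.0f} B, text {text} B, ratio {voice / text:.0f}x")
# prints "voice 900 B, text 34 B, ratio 26x"
```

Even against this very low voice bit rate, the text form is more than an order of magnitude smaller.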

Interface examples

Insert picture for example

VOVR (Voiceover)

Main Article: Voiceover

Features

The application starts in startup mode. The application converts text to voice (speech synthesis). Thus, for example, incoming messages in CHAT can be listened to rather than read.

Interface examples

Insert picture for example

CAC (Computer Audition control)

Main Article: Computer Audition Control

Features

The dataset of known sound patterns, developed with deep learning (AI), is downloaded from the cloud to the client's local SSD during each update. During operation, the built-in detection and recognition system, based on machine learning (AI), analyzes sounds to identify known patterns. This requires significantly less computing power and time than training. At the same time, each client (device) saves samples of its results and sends them to the server to replenish the dataset and improve the algorithms. The CPU and GPU are configured so that part of their processing power is reserved exclusively for individual tasks processed in parallel threads.

One of the advantages of the ARHUDFM technology is the ability to use other data for clarification. For example, the same sound source may be detected from another listening position by another device user, and this information, given the distance and the listening position, may contain more data. Data exchange within the P2P network lets several devices combine their efforts for identification. Another capability is to run Computer Vision in parallel for identification by graphic patterns. A third capability is radio wave detection of inanimate objects (SDRS, RDF, passive radar, metal re-radiation radar). The synergy of several detection technologies, running in parallel and integrated with each other, offers exciting potential.

The application starts when one of the presets is enabled. It does not start by default.

Presets:

- DAM (Detect noises of aircraft motor), unlike DHUM, works only with patterns of aerodynamic, electromechanical and mechanical noise, using a trained neural network to isolate and amplify the sounds and noises most likely associated with drones, helicopters, turboprops and jet aircraft. This preset lets the user focus on likely threats from the air; it determines the direction of the sound source (azimuth, elevation) and its distance[9] (with an error of less than 4%, taking wind speed and direction into account), course direction and geospatial position, and displays them on the navigation grid. DAM mode is recommended in conjunction with Radio Frequency Drone Detection (RFDD) and the Computer Vision Motion Detection (MD) mode

- ENAT sequentially isolates the sounds and noises of nature (wind, rustling leaves, birdsong, water, etc.) in several frequency ranges using filters, and then uses a trained neural network to select patterns for final filtering. This preset lets the user focus on the sounds and noises associated with human activity

- ECHO uses built-in algorithms to detect primary and reflected sound waves and correct the direction to the sound source while other presets are working. When one of the other presets is already enabled, the system prompts the user when to enable ECHO

- DHUM works in the opposite direction to ENAT: it uses a trained neural network to isolate and amplify the sounds and noises most likely associated with human activity (tire friction, footsteps, stone friction, radio speaker sound, engine sounds, electromechanical noise, metallic knocks, coughing, sneezing, speech, etc.). It highlights and amplifies these audio patterns while attenuating the ambient sound level, so the user can focus on sounds associated with human activity and determine the direction of their source. This preset also uses sound ranging (acoustic location) to determine the coordinates of an enemy firing battery from the sound of its guns (or mortars, or rockets)[10]

- STHR assists the user in variable high ambient sound pressure conditions by dynamically reducing the maximum level by up to ∆80 dB, to 15-85 dB SPL[11]. STHR is synchronized with the electromechanical sound dampers in the headphone design

GFL (Gunfire Locator)

Further information: Gunfire locator

The preset for detecting the sound of gunfire (small arms, mortar, artillery, rocket artillery) and of ammunition breaking the sound barrier is always enabled. It determines the direction of the source and its range by phase shift and triangulation, and also from the Doppler effect (the sound of a bullet or projectile in flight). Sound patterns with a sharply defined wavefront, such as a gunshot, a bump, a bang, a single knock or a sneeze, allow the direction and range of the source to be determined with high accuracy (barometric and climatic parameters are used to correct the nominal speed of sound).
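The direction-finding part of the gunfire locator can be illustrated with the time difference of arrival (TDOA) between two microphones, with the speed of sound corrected for air temperature as the paragraph above notes. This is a simplified far-field sketch for a single microphone pair; the function names, the pair spacing and the dry-air speed-of-sound approximation are assumptions, not the device's actual algorithm.

```python
import math


def speed_of_sound_mps(air_temp_c: float) -> float:
    """Dry-air approximation: c = 331.3 * sqrt(1 + T/273.15) m/s."""
    return 331.3 * math.sqrt(1.0 + air_temp_c / 273.15)


def bearing_from_tdoa_deg(delta_t_s: float, mic_spacing_m: float, air_temp_c: float = 15.0) -> float:
    """Far-field angle of arrival relative to the broadside of a two-microphone pair.

    The sign of the result tells which side of the pair's axis the source is on.
    """
    c = speed_of_sound_mps(air_temp_c)
    ratio = c * delta_t_s / mic_spacing_m
    if abs(ratio) > 1.0:
        raise ValueError("time difference larger than the spacing allows; check inputs")
    return math.degrees(math.asin(ratio))


# Hypothetical pair 0.2 m apart at 15 °C, wavefront arriving 294 microseconds apart.
print(round(bearing_from_tdoa_deg(294e-6, 0.2, 15.0), 1))
```

A single pair cannot distinguish front from back (any source on the same cone gives the same delay), which is one reason a mask with many microphones can resolve direction far better than one pair.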

Existing examples

The EARS® family of gunshot localization systems gives soldiers and military police the situational awareness necessary to respond instantly and accurately to hostile attacks to better protect themselves from snipers and other gunfire threats.[12]

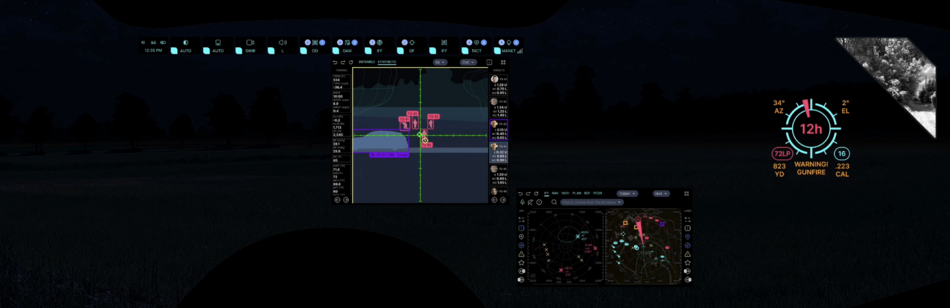

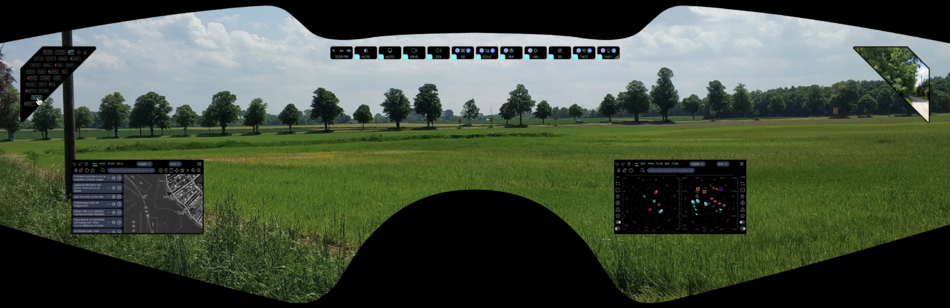

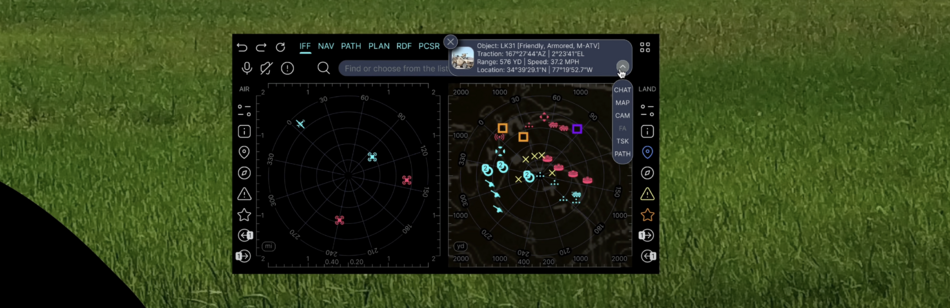

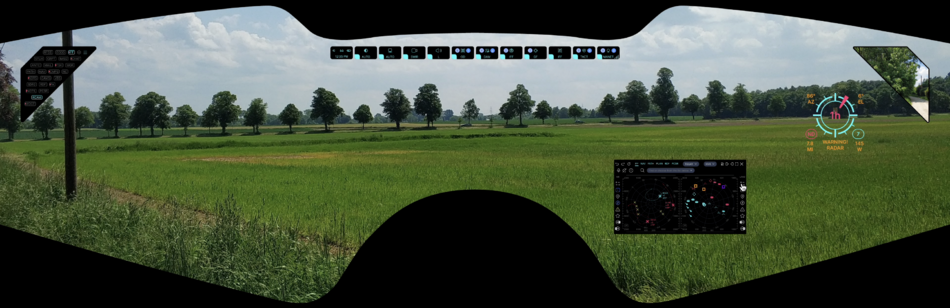

The picture above shows an example of the user interface:

- a red dot is visible on the compass field on the left side, indicating the azimuth of the shot source (16°)

- below left, a large Gunfire Locator icon shows the direction of the incoming shot (11 o'clock), range to source (331 yd), elevation (0°), ammunition caliber (.300) and number of shots fired (19)

- below left on the navigation grid you can see the localization of the source of enemy fire (GPS coordinates of the target are available to other users as well)

- the same navigation grid shows objects identified with the help of IFF (transponder), SDRS and RDF (radio interception and direction finding), PCSR (passive radar), CVC (computer vision) - to make it easier to use the navigation grid there are layers and filters, as well as pop-up and audible tips

- in the window in the center, this target is not shown in this user's priority list, because other users are closer to the target and it has a higher priority for them; AI evaluates the probability of crossfire and optimizes target allocation

Brief overview of assistance apps

Here we briefly describe the functionality of the apps. A more detailed description of each application is on its main article page; see the links below.

FA (Firing Assistance control)

Main Article: Firing Assistance Control, Night Vision, Digital Sights

Features

The application includes:

- capture and track up to 10 targets simultaneously (uses Computer Vision Control)

- ballistic calculator (uses several applications at the same time, including Computer Vision Control)

- aiming assistant for all types of aiming devices

- fire spotter (uses Computer Vision Control)

- target designation history

The application is launched manually, as a widget at the top of the CAM app window, by enabling one of the FA presets; the OD preset of Computer Vision Control is then launched automatically.

Presets:

- GF (Fire on ground fixed targets)

- GM (Fire on ground moving targets)

- AM (Fire on aerial moving targets)

- MF (Mountain fire on fixed targets)

- MM (Mountain fire on moving targets)

Ballistic calculator

For each target, the ballistic calculator computes the trajectory to the target range from 20 parameters, for both static and moving targets (the display can be customized):

- TYPE - weapon type (saved in profile), incl. muzzle velocity (MVmph/kmh), zero range (ZRft/m), bore height / sight height (BHin), zero height (ZHin), zero offset (ZOin), SSF elevation (SSFe), SSF windage (SSFw), EclckMOA/MIL and WclckMOA/MIL, rifle twist rate (RT), rifle twist direction (RTd), calibrate muzzle velocity (CalMV), calibrate DSF (Drop Scale Factor) (CalDSF)[13][14]

- AMMO - ammunition type (saved in profile), incl. manufacturer's ballistic coefficient (BC), drag curve / drag model (DM) (G1, G7, custom), bullet weight (BWgr), bullet diameter (BDin), bullet length (BLin)

- TRNGyd/m - target range (no laser rangefinder[15][16])

- SLHft/m/x° - sighting line height, a negative value indicates a downhill shot

- ALTBft/m - altitude barometric, the vertical distance associated with given atmospheric pressure; an accurate reading depends on correct initial barometric pressure input and stable barometric pressure while measuring

- BPinHg/mmHg - barometric pressure, the local station (or absolute) pressure adjusted to mean sea level; an accurate reading depends on a correct altitude input and unchanging altitude while measuring

- RH% - relative humidity

- AIRT°F/°C - air temperature

- DoFx° - direction of fire, course angle to the magnetic pole for the Coriolis effect

- LATx°y'z'' - latitude, the north-south position on the Earth's surface; negative values are south of the equator

- WDIRhrs/x° - the direction the wind blows from (incl. the crosswind, headwind and tailwind components of the full wind)

- WINDmph/kmh - wind speed (time average, last 2 measurements, manual entry of min and max, last measurement from 2 different impellers, last measurement from external device)

- AIRUPmph/kmh - upward air flow velocity (incl. dynamic upward flow and convection air flow), takes into account time of day, season, temperature, slope curvature and height, measurement history, weather satellite data

- DAft/m - density altitude[17], the altitude at which the density of the theoretical standard atmospheric conditions (ISA) would match the actual local air density

- DPT°F/°C - dew point temperature[18], the temperature at which water vapor will begin to condense out of the air

- HSI - heat stress index, a calculated value of the perceived temperature based on temperature and relative humidity

- SPinHg/mmHg - station pressure (absolute pressure), the pressure exerted by the atmosphere at the location

- WBT°F/°C - wet bulb temperature (psychrometric), the lowest temperature that can be reached in the existing environment by evaporative cooling; the wet bulb temperature is always equal to or lower than the ambient temperature

- WCH°F/°C - wind chill, a calculated value of the perceived temperature based on temperature and wind speed
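Several of the parameters above are derived from standard meteorological relations. As an illustration only, here is a minimal Python sketch of two of them, assuming the Magnus approximation with one commonly used coefficient set for the dew point and a clock-position convention for the wind (12 o'clock = wind from the target toward the shooter, each hour = 30°). The article does not specify which formulas the device uses; the function names are hypothetical.

```python
import math

# One commonly used Magnus coefficient set (assumption; the device's formula is not specified)
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # °C


def dew_point_c(air_temp_c: float, rh_percent: float) -> float:
    """Dew point (°C) from air temperature (°C) and relative humidity (%), Magnus approximation."""
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def wind_components(speed: float, clock_hrs: float) -> tuple[float, float]:
    """Split the full wind into (crosswind, headwind) for a wind blowing FROM a clock position.

    12 o'clock = pure headwind, 3 o'clock = full-value wind from the right.
    Positive crosswind = from the right; negative headwind = tailwind.
    """
    angle = math.radians(clock_hrs * 30.0)  # each clock hour is 30 degrees
    return speed * math.sin(angle), speed * math.cos(angle)


if __name__ == "__main__":
    print(round(dew_point_c(20.0, 50.0), 2))  # 9.26
    print(wind_components(10.0, 3))           # almost all crosswind from the right
```

At 100% relative humidity the formula returns the air temperature itself, which is a quick sanity check for any implementation.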

The calculator displays 16 ballistic reference values (the display can be customized):

- ToFs - time of flight

- RemVfps/mps - remaining ammo velocity

- Mach - remaining ammo velocity in Mach units

- Rtrnsft/m - transonic range is the distance traveled by the bullet before it slows to transonic speed (Mach 1.2)

- Rsubft/m - subsonic range is the distance traveled by the bullet before it slows to subsonic speed

- RemEft-lbf/J - remaining energy

- SpnDMOA/MIL L/R - spin drift (gyroscopic drift)

- CIA - cosine of the inclination angle to the target

- DroMOA/MIL - ammo true drop, the total drop the bullet experiences from its highest point in flight

- Trcein/cm - trace is the height above the elevation solution where the trace of the bullet will be most visible

- MaxOin/cm - maximum ordinate, the height above the axis of the barrel that a bullet will reach along its flight path

- MaxORft/m - range at which the bullet will reach its maximum ordinate

- AerJ - aerodynamic jump is the amount of the elevation solution attributed to aerodynamic jump

- VCorMOA/MIL U/D - vertical Coriolis effect is the amount of the elevation solution attributed to the Coriolis effect

- HCorMOA/MIL L/R - horizontal Coriolis effect is the amount of the windage solution attributed to the Coriolis effect

- SPDmph/kmh - the speed of a moving target; a negative value indicates a target moving left

Additionally, for other presets:

- for the GM and MM presets, additionally calculates the angular velocity for uniform target movement, the distance to the target and the course of the target, and indicates lead corrections

- LEAD MOA/MIL L/R - the horizontal correction needed to hit a target moving left or right at a given speed

- for the AM preset, additionally calculates the angular velocity and the change in azimuth, and determines the nearest probable trajectory with the lead corrections

- for the MF preset, additionally takes into account more complex parameters for calculating the ballistic trajectory in mountainous and desert areas: landscape assessment, a steeper line of sight (sightline height), temperatures, estimates of air updrafts and the mirage effect, and shot history

- for the MM preset, the same as for MF, plus the angular velocity and the lead corrections
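The Mach value and the LEAD correction can be illustrated with textbook relations. The following Python sketch is an assumption-laden simplification, not the FA app's method: it uses a common dry-air approximation for the speed of sound, and it assumes a full-value crossing target moving at a constant speed, with the time of flight (ToFs) already supplied by the ballistic solver. Function names are hypothetical; the sign convention follows SPD (negative = moving left).

```python
import math


def speed_of_sound_mps(air_temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at the given air temperature."""
    return 331.3 * math.sqrt(1.0 + air_temp_c / 273.15)


def mach(velocity_mps: float, air_temp_c: float) -> float:
    """Remaining bullet velocity expressed in Mach units."""
    return velocity_mps / speed_of_sound_mps(air_temp_c)


def lead(target_speed_mps: float, time_of_flight_s: float, range_m: float) -> tuple[float, float, str]:
    """Horizontal lead for a target crossing at a right angle with uniform speed.

    Returns (lead in mil, lead in true MOA, "L" or "R").
    """
    offset_m = abs(target_speed_mps) * time_of_flight_s  # how far the target moves during flight
    angle_rad = math.atan2(offset_m, range_m)
    lead_mil = angle_rad * 1000.0                        # milliradians
    lead_moa = math.degrees(angle_rad) * 60.0            # true minutes of angle
    return lead_mil, lead_moa, "L" if target_speed_mps < 0 else "R"


if __name__ == "__main__":
    print(lead(-5.0, 1.0, 500.0))  # about (10.0 mil, 34.38 MOA, 'L')
```

A real solver would iterate, because the time of flight depends on the range at the predicted intercept point, not at the current target position.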

Using the ballistic calculator

- the user aims the reticle crosshairs (point of impact) at the intended target and locks the target manually, or the target is locked automatically using Computer Vision; the list of targets is displayed as a horizontal list at the bottom of the window

- the current target is in the middle, the history of target designations is on the left and the next targets are on the right

- target names are colored according to the rules of the IFF app

- if several targets are displayed in the window at the same time, they are marked with numbers, as in the list of target designations below

- the user can view the parameters of the ballistic calculation in the interface and change them manually (input fields)

- the application displays the aiming point on the reticle (inner diameter 2 MOA, outer diameter 5 MOA), taking into account the corrections relative to the crosshair, with a scale in the form of digital data (Eunit and Wunit) in MOA/MIL (true minute of angle/milliradian)

- separately displays the numerical values of the Elevation and Windage corrections:

- EU/D and EclckU/D

- W1L/R - average measured wind speed and W1clckL/R

- W2L/R - maximum measured wind speed and W2clckL/R - so that you can make these corrections manually on the telescopic sight (the user must enter the number of clicks needed to move the point of aim by one TMOA or mil, based on the turrets of their scope)
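Converting the displayed corrections into turret clicks is simple arithmetic. The sketch below is an illustration, not the app's code: it assumes corrections are given in true MOA or mil, that the click value of the scope is entered by the user, and that positive values mean up/right. The helper names are hypothetical.

```python
import math

# 1 mil = 1/1000 rad and 1 true MOA = 1/60 degree, so 1 mil is about 3.4377 TMOA
TMOA_PER_MIL = (180.0 / math.pi) * 60.0 / 1000.0


def to_unit(value: float, unit: str, target_unit: str) -> float:
    """Convert an angular correction between 'MOA' (true MOA) and 'MIL'."""
    if unit == target_unit:
        return value
    if unit == "MIL" and target_unit == "MOA":
        return value * TMOA_PER_MIL
    if unit == "MOA" and target_unit == "MIL":
        return value / TMOA_PER_MIL
    raise ValueError(f"unsupported units: {unit} -> {target_unit}")


def clicks(correction: float, unit: str, click_value: float, click_unit: str) -> int:
    """Whole turret clicks for a correction; the sign keeps the direction (up/right positive)."""
    return round(to_unit(correction, unit, click_unit) / click_value)


def tmoa_inches(tmoa: float, range_yd: float) -> float:
    """Linear size in inches subtended by an angle in true MOA at a range in yards."""
    return range_yd * 36.0 * math.tan(math.radians(tmoa / 60.0))


if __name__ == "__main__":
    print(clicks(2.5, "MOA", 0.25, "MOA"))  # 10 clicks on a 1/4 MOA turret
    print(clicks(1.0, "MIL", 0.25, "MOA"))  # 14 clicks: 1 mil is about 13.75 quarter-MOA clicks
```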

Using the aiming assistant

- when using Red Dot Sights or Holographic Sights (both eyes open), the user visually combines:

- target outline

- position of the aiming point (subject to amendments)

- sight dot (a Red Dot typically has a diameter of 2-5 MOA, the same as the size of the application's aiming point), thus shifting the sight dot (line of sight) so that it hits the target at the crosshair point (point of impact), as shown on the device screen[19][20][21]

- when using non-digital sights, the sight glass (reticle/dot) must be raised about 2.5 inches above the eye (using an adapter on the standard Picatinny rail) so that the line of sight matches the line of vision of one of the two cameras

- if the sight does not have a night vision function, it is recommended to use camera modes: SWIR, SLV, MXV2, MXV4

- if the sight has a night vision function, it is recommended to use the FHD camera mode

- if desired, the user can additionally turn on the adjacent central screen (CLW or CRW) with a different camera mode

- when integrated with a digital sight, the application immediately displays the sight line of the digital sight on the screen as a yellow diamond, which must be aligned with the displayed aiming point

- default colors: reticle - black, aiming dot - azure, digital sight line of sight - yellow

- colors are user configurable for ambient light level and camera mode

- if the lower part of the mask is used, its protruding elements (air filters) interfere with the standard cheek weld on the rifle while aiming; at the same time, when an adapter is used to raise the sight, the usual butt position against the shoulder and a vertical rifle position give a more comfortable head posture with less neck bending during firing

Fire spotter

- evaluates the shot by sound and compares the dynamics of the image within the radius of the crosshair

- additionally, the voice assistant asks the user to confirm that the target has been hit in order to determine whether to proceed to the next target or make an adjustment

- in case of a miss, evaluates the inaccuracies of the ballistic calculation and offers the user corrections

- takes into account the history of shots and provides quick access to the history for the user

Circular Error Probable

If the offset of the hit is not known for the correction, the strategy of the Battleship paper game is applied. It is assumed that the aiming was done exactly at the center of the rhombus (aiming point); then the offset from the primary aiming point is calculated from the weighting factors of the ballistic calculation and the history of the CEP (circular error probable). For example, if wind is the most influential factor, it is given priority when choosing the offset for the second attempt. This hypothesis has yet to be tested experimentally.
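The Battleship-style search described above can be sketched as follows. This is a hypothetical illustration of the hypothesis, not a tested method: the CEP is estimated empirically as the median radial distance of impacts from their mean point of impact (a common estimator), and each ballistic factor is assumed to come with a weight and an expected error vector. The function names and the ordering rule (dominant factor first, in both directions) are assumptions.

```python
import math
from statistics import median

Point = tuple[float, float]  # (windage, elevation) offset, e.g. in MOA


def cep(impacts: list[Point]) -> float:
    """Empirical CEP: median radial distance of the impacts from their mean point of impact."""
    mx = sum(x for x, _ in impacts) / len(impacts)
    my = sum(y for _, y in impacts) / len(impacts)
    return median(math.hypot(x - mx, y - my) for x, y in impacts)


def battleship_offsets(factors: dict[str, tuple[float, Point]]) -> list[Point]:
    """Ordered aim offsets to try after a miss whose offset was not observed (hypothetical scheme).

    factors maps a factor name (e.g. 'wind') to (weight, expected error vector).
    Attempt 1 aims at the rhombus center; later attempts probe the most influential
    factor first, in both directions, then the next factor, and so on.
    """
    ranked = sorted(factors.values(), key=lambda wv: wv[0], reverse=True)
    offsets: list[Point] = [(0.0, 0.0)]
    for _, (dx, dy) in ranked:
        offsets.append((dx, dy))
        offsets.append((-dx, -dy))
    return offsets


if __name__ == "__main__":
    plan = battleship_offsets({"wind": (0.6, (1.5, 0.0)), "range": (0.3, (0.0, 1.0))})
    print(plan)  # center, then wind +/-, then range +/-
```

In a fuller version, the expected error vectors could be scaled by the CEP measured from the shot history.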

Types of weapons and ammunition

- no limit on the number of saved weapon and ammo profiles (unlike some other manufacturers' models)

- suitable for all types of small arms and grenade launchers where a ballistic trajectory is used (without jet thrust and gas generator)

- the use of the ballistic calculation function for mortar fire is being investigated

- the AM (Fire on aerial moving targets) preset is designed primarily for use "as an anti-aircraft gun on the ground" against drones at altitudes up to 1000 yards and, with increased range, against aircraft at altitudes up to 2000 yards, including with the machine guns M2E2 / M2A1[22][23], M240B / M240L / Barrett 240LW[24], M249[25], M134 Minigun[26], XM250[27], MG5[28], M27[29][30]

- the use for firing from a helicopter is being investigated

- the use of the function for remote control of MANPADS (man-portable air-defense systems)[31] (RBS 70/90[32], Javelin[33]) on a robotic turret (Lightweight Multiple Launcher, LML) is being investigated

Options and integrations

- Continuous Wind Capture (prospective function): 2 compact impellers on a turntable can be installed at the top of the mask (for more accurate, hands-free measurement of wind speed and direction); they unfold by a spring mechanism and lock when folded, and the measurement intervals are set by the user

- for ballistic calculation at distances up to 5500 yards, integration with a portable weather station via Bluetooth is possible, for example the Kestrel 5700X Elite with LiNK[34][14][35] (LiNK Wireless Dongle connected to the top USB port of the mask), Garmin Foretrex 701 and Garmin tactix Delta Solar Edition[36][37]

- as an option, other anemometers can be used to measure wind speed and direction, or the measured data of one user can be transmitted by other users within the P2P network

Related services

- when using digital sights, the sight line may be outside the field of view of the device's camera with a given magnification - in this case, thanks to the sensors of the digital sight, indicators appear on the screen in which direction the sight line needs to be shifted

- a list of detected and hit targets is entered as an auto task with characteristics and photo confirmation for further analysis

- all shots can be automatically entered as a completed auto task for greater detail

- operational history of shots is available in the app window

- detailed shot history available in the TSK app

- the FA app can track the ammunition stock and notify the user, as well as the BMS (Battlefield Management System) analytical center, of the current ammunition stock

- in accordance with the additional terms of reference, the algorithm, when capturing targets, can independently set priorities for targets

Capability to make fire corrections at night and hit small low-flying aerial vehicles

At dusk and at night (and also during the day), in fog, smoke and dust, and when shooting at aerial targets, snipers, machine gunners and any other shooters benefit from tracer bullets, which, however, reveal the shooter's position. Without tracer bullets, corrections cannot be made. Hitting small low-flying aerial vehicles at altitudes of 60 ft or more in darkness, smoke, dust, fog or bad weather is very difficult, especially if the target is a small drone less than 8 in across. Mixed vision in the HDR / SWIR / LWIR ranges helps here: it tracks the target more clearly and shows the trajectory of hot bullets in the long-wave infrared range.

The resolution of the Lepton 3.5 LWIR sensor (160x120 px, 57° field of view, with shutter) is too low for a sharp image, let alone digital zoom. Folded-optics camera lens designs solve this problem: for example, an area of the sky can be zoomed in optically, captured on the sensor without digital zoom and then post-processed by Computer Vision, which reveals the contours of the object for reliable aiming. CPU load balancing is also needed in this case to process streaming video at a sampling rate of at least 240 fps. This makes it possible to see the traces of fast-flying (660-900 meters per second) bullets and projectiles and to correct aiming.

The video capture model is as follows. The LWIR sensor captures images at 240 fps; bright pixels are processed by the preprocessor and then encoded (temporal compression) at 30 or 60 fps, preserving motion details. Images on the other HD, HDR and SWIR matrices are captured at 30 or 60 fps from the start. The preprocessor extracts objects (sampling) by means of Computer Vision at 6 fps and then performs object detection. The postprocessor then adds the video streams layer by layer and processes them in real time (30-80 ms delay) at 30 to 60 fps for playback in the interface window: source signal + fire trace image + Computer Vision markers.
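One simple way to reduce 240 fps to 60 fps without losing a bullet trace that is visible in only one or two frames is per-pixel temporal max pooling: every group of 4 consecutive frames becomes one frame that keeps each pixel's brightest value. The sketch below assumes this technique; the article does not name the encoder actually used. It then layers the bright trace pixels over a base frame, as in the "source signal + fire trace image" step. Frames are plain nested lists and all names are hypothetical.

```python
def temporal_max_pool(frames, factor):
    """Collapse each run of `factor` consecutive frames into one by keeping every pixel's maximum.

    frames: list of equally sized 2-D frames (lists of rows of pixel intensities).
    Trailing frames that do not fill a whole group are dropped.
    """
    pooled = []
    for start in range(0, len(frames) - factor + 1, factor):
        group = frames[start:start + factor]
        rows, cols = len(group[0]), len(group[0][0])
        pooled.append([[max(frame[r][c] for frame in group) for c in range(cols)]
                       for r in range(rows)])
    return pooled


def overlay_trace(base, trace, threshold):
    """Layer trace pixels at or above `threshold` over the base frame (same size)."""
    return [[t if t >= threshold else b for b, t in zip(base_row, trace_row)]
            for base_row, trace_row in zip(base, trace)]


if __name__ == "__main__":
    # 8 LWIR frames of 1x3 pixels at 240 fps; a hot bullet crosses the row in frames 0-2
    frames = [[[0, 0, 0]] for _ in range(8)]
    for i in range(3):
        frames[i][0][i] = 255
    pooled = temporal_max_pool(frames, 240 // 60)          # 240 fps -> 60 fps
    print(pooled)                                           # [[[255, 255, 255]], [[0, 0, 0]]]
    print(overlay_trace([[10, 20, 30]], [[255, 0, 0]], 200))  # [[255, 20, 30]]
```

The pooled frame shows the whole streak of the bullet, which a plain frame-dropping decimation would mostly lose.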

See also: About video and audio encoding and compression[38]

Machine Learning tools are also applied to engage aerial targets effectively: a probabilistic lead (preemption) model is calculated for target designation when firing at aerial targets with complex trajectories.
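The deterministic core of any lead model is the intercept problem. The following Python sketch is a simplification and explicitly not the probabilistic ML model described above: it assumes the target keeps a constant velocity and the projectile a constant mean speed over the flight, and it ignores gravity and drag. It solves |d + v·t| = s·t for the smallest positive time t, which reduces to the quadratic (v·v − s²)t² + 2(d·v)t + d·d = 0. All names are hypothetical.

```python
import math

Vec = tuple[float, float, float]


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def intercept_time(d: Vec, v: Vec, s: float):
    """Smallest t > 0 with |d + v*t| = s*t, or None if the projectile cannot reach the target.

    d: target position relative to the shooter (m); v: target velocity (m/s);
    s: mean projectile speed over the flight (m/s), assumed constant.
    """
    a = _dot(v, v) - s * s
    b = 2.0 * _dot(d, v)
    c = _dot(d, d)
    if abs(a) < 1e-9:  # target as fast as the projectile: the equation becomes linear
        return -c / b if b < 0 else None
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    times = [t for t in ((-b - root) / (2 * a), (-b + root) / (2 * a)) if t > 0]
    return min(times) if times else None


def aim_point(d: Vec, v: Vec, s: float):
    """Predicted intercept point (target position after the time of flight), or None."""
    t = intercept_time(d, v, s)
    if t is None:
        return None
    return (d[0] + v[0] * t, d[1] + v[1] * t, d[2] + v[2] * t)


if __name__ == "__main__":
    # drone 300 m ahead and 100 m up, crossing at 15 m/s; mean projectile speed 700 m/s
    print(aim_point((0.0, 300.0, 100.0), (15.0, 0.0, 0.0), 700.0))
```

A probabilistic model would replace the single constant velocity with a distribution over likely future trajectories and aim at the most probable intercept region.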

Both functions of this technology can destroy aerial targets in the form of micro, mini, medium and large drones at altitudes up to 1-2 miles.

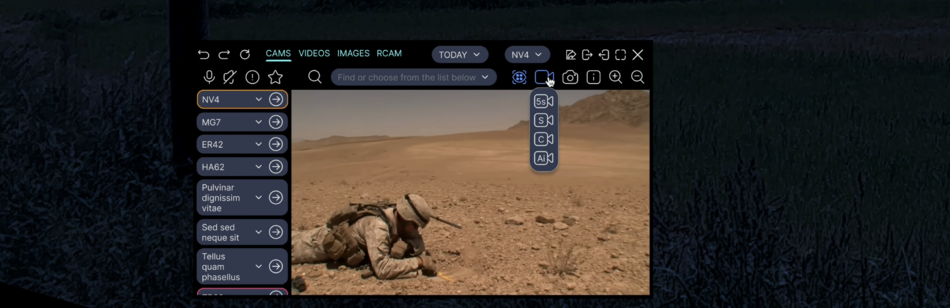

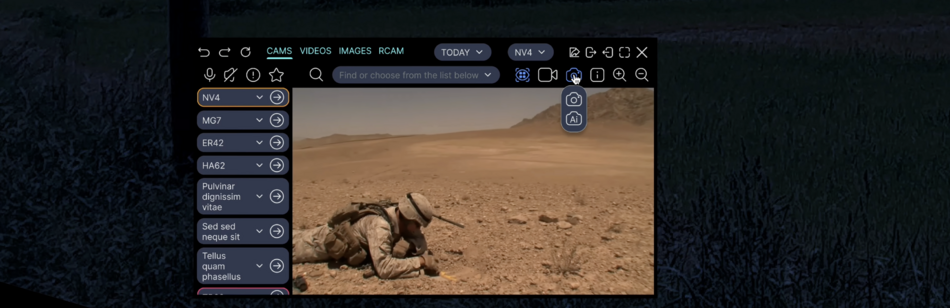

Interface examples

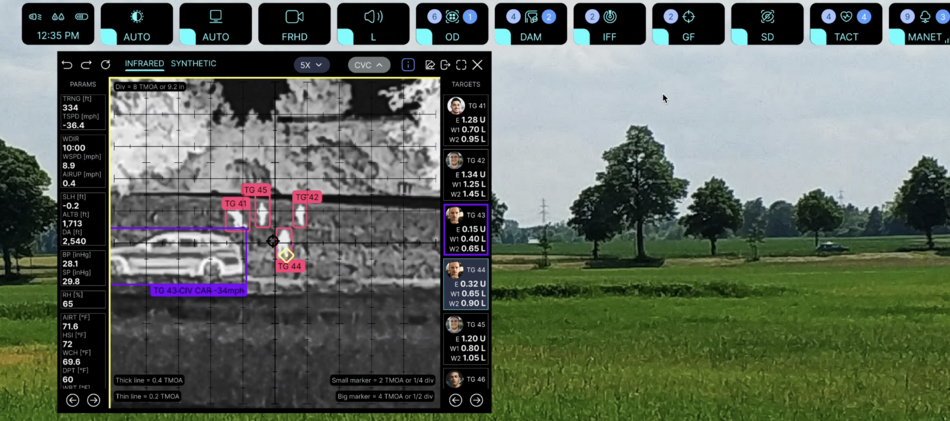

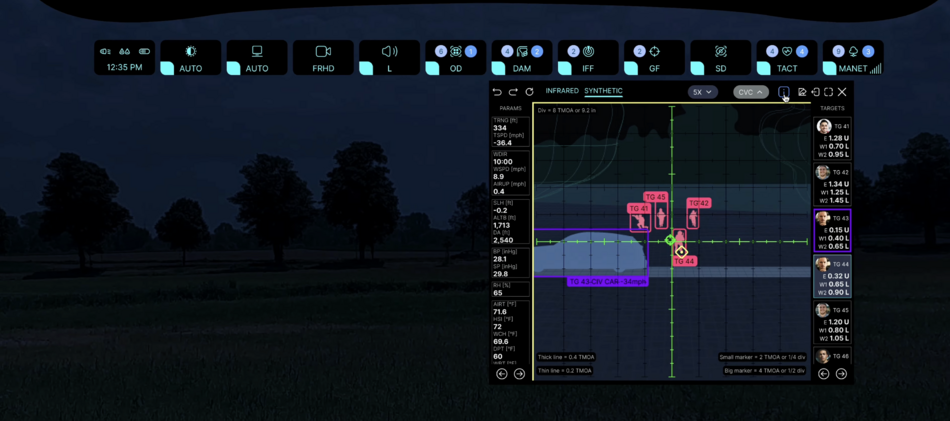

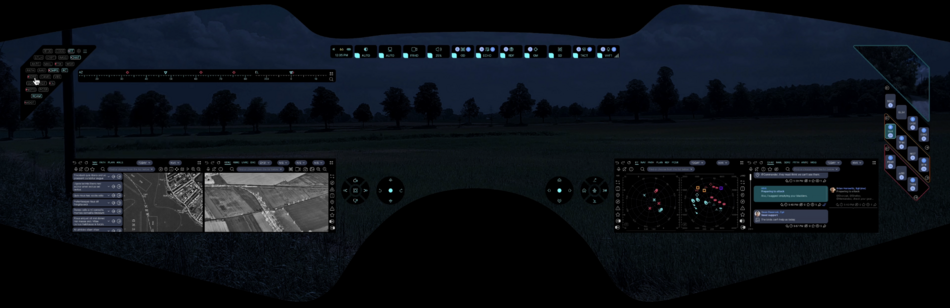

The picture above shows an example of the user interface:

- the window in the center displays the search and target designation view of the FA system

- SWIR camera sensor view, 5x zoom, MOA reticle; for this display, one reticle division corresponds to 13.29 in

- actual ballistic calculations are displayed on the left and right sides of the screen

- the bottom of the screen displays the captured targets in an individual sequence for each user; target priority is formed by AI based on parameters such as the user's proximity to the target, crossfire avoidance, target type and armor protection, target speed, the user's ammunition stock and type, and the types of available weapons

- Fire Assistance assists with target capture and aiming, while AI enables smart target distribution (target selection and prioritization, fire correction) between multiple users, so that several users do not fire at one target at the same time while another target remains unengaged

- targeting correction values are shown at the bottom for each target

- targets are displayed in color according to the IFF classification (in this case, the user can see that the targets are civilian objects, and this is also indicated by the large icon on the right "Warning! Civilians")

- objects in the search and target designation window are identified by the CVC (Computer Vision Control) system; the user can select an object of interest and get more detailed information in the context window on the right side of the screen

- the aiming line including corrective adjustments is shown in the form of a yellow rhombus

- the aiming line of the digital sight is indicated in the form of an azure circle with two crosshairs

- the CVC system tracks hits on the target by characteristic signs (point of entry, dust cloud, deformation, spark, etc.) and by high-frequency LWIR image capture (trajectories of hot bullets and projectiles); if the hit is inaccurate despite precise aiming, the corrections are recalculated; if the aiming was inaccurate, they remain unchanged

- shot history for each target is stored in memory and displayed as a static image with a cloud of hit points
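The target prioritization and distribution described above can be sketched with a weighted score and a greedy one-to-one assignment. This is a hypothetical illustration: the weights, the 0..1 threat score (standing in for target type and armor protection) and the use of effective range for the weapon type are assumptions, crossfire avoidance is not modeled, and a greedy pass is not guaranteed to be optimal (an optimal version could use the Hungarian algorithm instead).

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    x: float
    y: float
    threat: float  # hypothetical 0..1 score from target type and armor protection
    speed: float   # m/s


@dataclass
class Shooter:
    name: str
    x: float
    y: float
    rounds: int
    effective_range: float  # m, for the shooter's current weapon


def score(shooter, target, w_threat=1.0, w_dist=0.5, w_speed=0.2):
    """Higher is better; None if the shooter cannot engage the target at all."""
    dist = math.hypot(target.x - shooter.x, target.y - shooter.y)
    if dist > shooter.effective_range or shooter.rounds == 0:
        return None
    return (w_threat * target.threat
            - w_dist * dist / shooter.effective_range    # prefer closer targets
            - w_speed * min(target.speed / 50.0, 1.0))   # fast targets are harder to hit


def assign(shooters, targets):
    """Greedy assignment: best-scoring pairs first, each target engaged by at most one shooter."""
    pairs = []
    for s in shooters:
        for t in targets:
            sc = score(s, t)
            if sc is not None:
                pairs.append((sc, s.name, t.name))
    pairs.sort(reverse=True)
    taken_shooters, taken_targets, plan = set(), set(), {}
    for _, s_name, t_name in pairs:
        if s_name not in taken_shooters and t_name not in taken_targets:
            plan[s_name] = t_name
            taken_shooters.add(s_name)
            taken_targets.add(t_name)
    return plan


if __name__ == "__main__":
    shooters = [Shooter("A", 0, 0, 100, 500), Shooter("B", 100, 0, 100, 500)]
    targets = [Target("T1", 0, 50, 0.5, 0), Target("T2", 100, 50, 0.5, 0)]
    print(assign(shooters, targets))  # each user gets the nearer target
```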

Facts. US forces have fired so many bullets in Iraq and Afghanistan - an estimated 250,000 for every insurgent killed - that American ammunition-makers cannot keep up with demand.[39]

Since WW1, WW2, the Korean War, the Vietnam War and operations in Iraq, Afghanistan and Syria, the amount of ammunition expended per target hit has been increasing. This is primarily due to harassing-fire tactics, higher rates of fire and greater fire density, plus ammunition expended during training and lost during transportation. For artillery, statistics show 8-11 shells per enemy killed. To hit 75 percent of the targets in an equipped enemy platoon position, 1,250 high-explosive shells are required, which equates to 60 rounds per person. The main development goal is to increase the proportion of guided munitions; for small arms, the main goal is to increase accuracy through training and electronic assistance.

SPOT (LED Spotlight settings)

→redirect Main Article: LED Spotlight

Features

The application starts when you turn on one of the modes in the SB (Status Bar). It does not start by default.

Capabilities:

- lighting in the near radius up to 10-30ft in front of the user with different intensity depending on the tilt of the head (super bright LED with reflector located at an angle of 45°)

- strobe mode[40][41]: used as a strobe weapon[42][43][44][45]; it effectively disables the enemy's peripheral vision and causes disorientation and indecision, allowing the user to change position, and it prevents the enemy from aiming accurately and returning effective fire; during an assault at twilight or in darkness, the fading mask screens and camera visibility protect military personnel and can win a second, two or even more of enemy inactivity

- SOS mode: used to locate the user for medical assistance and evacuation

- when the FULL stealth mode (STM) is on, the user cannot turn on the flashlight

- in power saving mode, the intensity will be reduced automatically or automatic shutdown will occur

- fine adjustments are made in the LED Spotlight settings - SPOT app

Interface examples

Insert picture for example

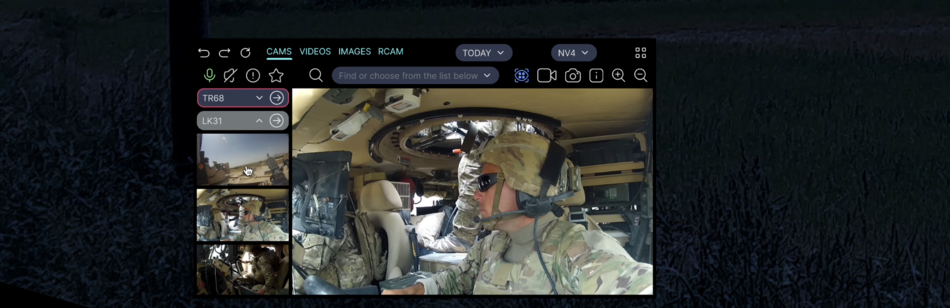

RPAC (Drone RC)

→redirect Main Article: Drone RC

Features

- remote control of different models through a single built-in controller (land, waterborne and aerial drones)

- interception of control of enemy drones (premature activation of ammunition drop, forced landing)

The application provides remote control of RPA and UAVs according to the communication protocol and functions that the manufacturer provides for integrators.[46] It uses the FPV (First Person View) principle, in which the drone operator uses a head-mounted screen. It includes manual remote control via RF signal, the automatic commands of the given model and an AI remote control model. Integration is configured in the menu section of the DVC app.

Streaming video and photos are transmitted over a radio channel (4.2-6.8 GHz) and recorded on a solid-state drive (SSD).

The software algorithm allows the drone to avoid collisions with obstacles.

The application window additionally uses CVC (Computer Vision Control) functions to detect and recognize objects, movement, people, faces, flashes, smoke and heat traces (if the drone's camera has an LWIR sensor). In addition, software zoom in and zoom out with image interpolation is used to improve image quality and highlight contours. Detected objects are listed below with their type (car, tank, helicopter, drone, person, etc.), speed, azimuth, altitude, GPS coordinates, course destination and size.

Multiple users can receive the drone's camera image. Drone control can be transferred from one user to another; this includes changing the RF signal source and the "home" settings of the drone during flight.

The application is started manually.

Joystick and buttons control

The software joysticks located below the central windows are used: 2 fading pads, left and right (CWJP and CWBP), with 2 joysticks and 10 buttons, 2 of which are multifunctional. The left pad controls the altitude (Z), rotation around the vertical Z axis, camera tilt, zoom in and zoom out, and camera modes. The right pad controls movement in the horizontal plane (X and Y), take-off, landing, automatic return (to the RF signal source or to the take-off geo-position) and the automatic following mode (following the RF signal source, the user or a specified object).

The software joysticks are controlled with the HT (Hand Tracking System) app.

Remote control is carried out using the navigation reticle in the app window. When only one surveillance camera is used, the flight can be controlled only by the navigation indicators. Key indicators:

- pitch

- roll

- horizon line

- course (azimuth)

- altitude

- speed (horizontal, vertical)

- distance from home (signal source or user)

- battery charge

- received and transmitted RF signal level (Rx, Tx)

- GPS signal level

- camera tilt angles

- camera mode and shooting options

- free space on SSD

Head tilt control with one joystick

To control the altitude (Z) and the rotation around the vertical Z axis, the user can tilt the head forward or back and turn the head left and right. When the head returns to its original position, the joystick position returns to 0.

To control movement along the X axis (moving the drone right and left), the user can tilt the head left and right. To control movement along the Y axis (moving the drone forward and backward), the user can move the head slightly back and forth.
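The head-tilt control above amounts to mapping head offsets from a calibrated neutral pose to joystick values, with a dead zone so that the joystick reads 0 when the head is back in its original position. The Python sketch below assumes linear scaling, symmetric dead zones and the listed thresholds; the thresholds, sign conventions and names are all hypothetical.

```python
import math


def axis(offset: float, deadzone: float, full_scale: float) -> float:
    """Map a head offset to a joystick value in [-1, 1] with a dead zone around the neutral pose."""
    magnitude = abs(offset)
    if magnitude <= deadzone:
        return 0.0  # head (almost) back in its original position -> joystick at 0
    scaled = min((magnitude - deadzone) / (full_scale - deadzone), 1.0)
    return math.copysign(scaled, offset)


def head_to_sticks(pitch_deg: float, yaw_deg: float, roll_deg: float, fwd_cm: float) -> dict:
    """Hypothetical mapping of head pose (relative to the neutral pose) to drone control axes."""
    return {
        "z": axis(pitch_deg, 3.0, 20.0),   # tilt forward/back -> altitude
        "yaw": axis(yaw_deg, 5.0, 30.0),   # turn left/right -> rotation around Z
        "x": axis(roll_deg, 3.0, 20.0),    # tilt left/right -> move left/right
        "y": axis(fwd_cm, 1.0, 5.0),       # move head forward/back -> move forward/backward
    }


if __name__ == "__main__":
    print(head_to_sticks(11.5, 0.0, -30.0, 0.5))  # half throttle on z, full deflection on x
```

The dead zone keeps small involuntary head movements from moving the drone, which matters when the same head also aims and observes.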

The user can set up part of the control functions using the built-in joystick and buttons A, B, A+B, C, D, C+D, +/- on the left, +/- on the right.

Control with voice commands

With the TESS voice assistant, the user can control all functions by voice.

Complex commands and automatic tasks

- Start mission, Pause mission, Stop mission - autonomous execution of the mission set through the NAV app (the control RF signal is not transmitted so as not to unmask the user; the RF signal from the drone with the data stream can also be turned off)

- Follow me, Follow target - following the user or the detected target at a safe (variable) altitude (control RF signal is not transmitted)

- Circle around me, Circle around target - orbit the user or target while keeping the camera focused on it (control RF signal is not transmitted)

- other commands and AI control options
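The "Circle around me / Circle around target" commands can be illustrated by generating orbit waypoints whose heading always points at the center. This sketch assumes a flat local east/north frame in meters and a heading measured clockwise from north; the frame, parameters and function name are hypothetical, not the drone manufacturer's API.

```python
import math


def orbit_waypoints(cx: float, cy: float, radius_m: float, count: int) -> list[tuple[float, float, float]]:
    """Waypoints (east, north, heading_deg) on a circle around (cx, cy).

    The heading (clockwise from north) points at the center, so the camera
    stays on the user or target during the orbit.
    """
    points = []
    for i in range(count):
        bearing = 2.0 * math.pi * i / count            # bearing of the waypoint seen from the center
        east = cx + radius_m * math.sin(bearing)
        north = cy + radius_m * math.cos(bearing)
        heading = (math.degrees(bearing) + 180.0) % 360.0  # look back at the center
        points.append((east, north, heading))
    return points


if __name__ == "__main__":
    for wp in orbit_waypoints(0.0, 0.0, 30.0, 4):
        print(wp)  # north, east, south, west of the center, each facing inward
```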

Integration

- configurable in DVC app

- drone categories: tricopters, quadcopters, hexacopters, octocopters, fixed wing drones

- drone classes: micro, mini, medium, heavy, super heavy

- ammunition drop system (most often, unguided hand grenades and mini-bombs)

Open source examples

Interface examples

Facts.

RBRC (Robot RC)

→redirect Main Article: Robot RC

Tasks Summary

| Missions | Fire Team | Force Recon - ISR | Light Armored | Scout Sniper | Low Altitude Air Defense | Helicopter - RPA | Artillery - Mortar | Combat Engineer | Flight Deck - Hull | Special Ops | SIGINT - EW | Medical Service |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intelligence (INT) - Surveillance (SURV) - Reconnaissance (Recon) | • Ambushes • RECON • SIGINT | • Ambushes • RECON • SIGINT | • Ambushes • RECON • SIGINT | • RECON • SURV • SIGINT | • SURV |  | • Crew Support (charging, cleaning) |  |  | • Ambushes | • SIGINT |  |
| Direct Action (DA) | • Forced entry | • Forced entry | • Forced entry • Gunfire Spotting | • Gunfire Spotting |  |  | • Gunfire Spotting |  |  | • Forced entry |  |  |
| Communication |  |  |  |  |  |  |  |  |  |  |  |  |
| Counter-Terrorism |  |  |  |  |  |  |  |  |  |  |  |  |
| Counter-Narcotics |  |  |  |  |  |  |  |  |  |  |  |  |
| Explosive Devices | • EOD • IED • Booby-Trapped Areas |  |  | • EOD • IED • Booby-Trapped Areas |  |  |  | • EOD • IED |  |  |  |  |
| Hostage Rescue |  |  |  |  |  |  |  |  |  |  |  |  |
| Combat Rescue |  |  |  |  |  |  |  |  |  |  |  |  |
| Casualty Evacuation (CASEVAC) |  |  |  |  |  |  |  |  |  |  |  | • CASEVAC |
| Transportation | • Carrying • Machine Gun | • Carrying | • Carrying |  |  |  |  |  |  | • Carrying |  |  |
| Site Security | • Patrolling | • Patrolling | • Patrolling |  |  |  |  |  |  |  |  |  |
| Detainee Riots |  |  |  |  |  |  |  |  |  |  |  |  |

Features

Universal Controller - High Definition (UC-HD) is a common controller that commands safety-critical unmanned assets such as large, high-speed unmanned ground vehicles (UGV) and unmanned aerial systems (UAS).

UC-HD was developed drawing on extensive knowledge of controlling large, high-speed and/or safety-critical unmanned systems. UC-HD is compatible with existing, fielded route clearance unmanned ground vehicles (U.S. Army REF Minotaur), medium-sized unmanned ground vehicles (TALON®), small unmanned ground vehicles and Group 1 UAS platforms. UC-HD is compliant with emerging program requirements.[49]

Benefits:

- ARHUDFM Embedded

- Intuitive Controls

- Modular

- Adaptable

- Reconfigurable

Interface examples

FTRC (Fire turret RC)

→redirect Main Article: Fire Turret RC

Features

Interface examples

UVRC (Unmanned vehicle RC)

→redirect Main Article: Unmanned Vehicle RC

Features

Interface examples

VM (Virtual mentor)

→redirect Main Article: Virtual Mentor

Features

The VM app is started manually as the VM tab of the MMD app group window (other tabs: REC, PLAY, TRSL). The application is intended for remote assistance from more qualified specialists, for monitoring the training process and for correcting errors in real time. It is a video conferencing app for two or more persons, including across different networks, that allows remote exchange of video, voice, text and files. Since the user is not in front of a camera, the app window shows one of the following instead of the user's video:

- user camera view

- drone camera view

- user static avatar (photo)

- user AI-based virtual avatar (like in Apple Vision Pro, Synthesia and many other apps)[50][51]

Where it can be used

- Care Under Fire

- remote mentoring by one or more qualified physicians

- repair and maintenance mentoring

- instructor during training and tactical exercises in L-STE (Live-Synthetic Training Environment)

- personal mentoring during field tactical exercises

Open source examples

Interface examples

Insert picture for example

TRSL (Translator)

→redirect Main Article: Translater

Features

The application is started manually. It translates in both directions, including converting the user's voice into text, translating the text into the language of the enemy or civilians, and playing it back in the target language through the loudspeaker and headphones.

AI-based text translation app.

Open source examples

- DeepL

Interface examples

Insert picture for example

NOTS (Notifications)

→redirect Main Article: Notifications

Features

The app starts automatically at startup. It displays notifications from other applications and system messages to the user and plays their sounds.

Open source examples

Interface examples

Insert picture for example

TIPS (User tips)

→redirect Main Article: User tips

Features

The app starts automatically at startup. It is based on machine learning and incorporates best practices from many users. Depending on which applications the user is running, it helps save valuable time and make the best decision, taking into account environmental factors, the mission, remaining resources, existing threats and the availability of resources from other team members or other domains. It works autonomously, without a constant connection to the BMS or L-STE systems. It is an AI-based app.

Open source examples

Interface examples

Insert picture for example

VBS (Vitals Body sensors control)

→redirect Main Article: Vitals Body Sensors Control, Body Sensors

Features

The application includes:

- continuous monitoring of 7 groups of vital signs

- warning system about the presence of dangerous and critical conditions of the body

- user notification system, incl. recommendations

- monitoring log

- Care Under Fire system[52][53]

- Resuscitation on the Move system[54][55]

The application runs in the background when you turn on one of the presets and is available in the RQM (Right quick menu). It does not start by default.

Presets:

- WRK (Workout mode)

- displays tips to the user about a range of undesirable risks: bradycardia, tachycardia, arrhythmia, hypothermia, hyperthermia, hypoxemia, hyperventilation, hemorrhagic shock, etc.

- when a dangerous level of indicators occurs, displays a message to the user and sends an SOS message to the BMS (Battlefield Management System) analytical center

- keeps a log of monitoring states

- TRN (Training mode)

- additionally takes into account the duration of the training session and whether it includes live-fire training

- applies a programmatically set training mode for the use of the automatic tourniquets

- TACT (Tactical mode)

- in addition to the above, if a dangerous level of acute massive blood loss occurs at one of the 8 sites protected by automatic tourniquets, it runs the "Care Under Fire" task for hemostasis and starts emergency automatic infusion therapy (fluid resuscitation)

- if there is a risk of ventricular fibrillation, it automatically starts the defibrillation procedure under ECG control

- if necessary, starts automatic pulmonary resuscitation using a pneumatic pump built into the air filter design

- OFF

- does not monitor

When the application is selected via the RQM (Right quick menu), the VUI (Voice user interface) via the voice assistant, or the LMM and RSB (Left main menu and Right submenu), it opens in a separate window showing real-time monitoring data, the log history and the log of messages and notifications.

The app:

- allows you to set up presets and integration with sensors through the DVC app

- allows accurate monitoring of vital signs in any position and on the move

- based on Machine Learning algorithms and medical scientific data, it assesses the presence of dangerous and critical conditions; when they occur, it sends messages and independently controls medical devices (automatic tourniquets, defibrillator, fluid resuscitation, pulmonary resuscitation)

- applies other AI methods

Monitoring

Vitals body data-driven monitoring including:

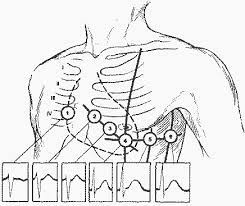

- HR bpm (ECG)[56] - assessment of rhythm and heart rate (pulse) in 12 leads, measured in 3 places on each arm and leg (sensors are built into 12 cuffs), as well as in 6 places on the chest (18 ECG electrodes instead of 10)

- NIBP mmHg (ART) - blood pressure (systolic / diastolic) non-invasively using 4 cuffs of the upper arm or thigh

- TEMP - body temperature (sensors are built into 12 cuffs)

- SpO₂ % - non-invasive method for monitoring the level of oxygen saturation in tissues (sensors are built into 12 cuffs); tissue oximetry method is based on the absorption by hemoglobin (Hb) of light of two different wavelengths (660 nm, red and 940 nm, infrared), which varies depending on its saturation with oxygen; the light signal, passing through the tissues, acquires a pulsating character due to a change in the volume of the arterial bed with each heartbeat; the pulse oximeter uses only light radiation and therefore is completely safe and has no contraindications

- ETCO₂ % - (capnometry) the CO₂ concentration at the end of exhalation, measured by the CO₂ sensor built into the mask airway obturator

- RESP bpm (RR) - Respiratory Rate, measured by a sensor built into the mask airway obturator

- HYDRL - hydration: optical sensors built into the drinking system determine how much and how often the user drinks

- Glasgow Coma Scale[57] to assess the level of consciousness and thus the degree of shock
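The two-wavelength principle described for SpO₂ is usually implemented as a "ratio of ratios": the pulsating (AC) part of each light signal is normalised by its steady (DC) part, and the resulting ratio R is mapped to a saturation value. The sketch below uses the commonly quoted linear approximation SpO₂ ≈ 110 - 25·R. This approximation and the function names are assumptions for illustration; real oximeters use a device-specific calibration curve.

```python
def ratio_of_ratios(ac_red: float, dc_red: float, ac_ir: float, dc_ir: float) -> float:
    """Normalise the pulsatile (AC) part of each wavelength by its steady (DC) part."""
    if dc_red <= 0 or dc_ir <= 0 or ac_ir <= 0:
        raise ValueError("DC levels and the infrared AC amplitude must be positive")
    return (ac_red / dc_red) / (ac_ir / dc_ir)


def spo2_estimate(r: float) -> float:
    """Textbook linear approximation SpO2 ~ 110 - 25*R, clamped to 0..100 %.

    Assumption: real devices replace this with an empirical calibration table.
    """
    return max(0.0, min(100.0, 110.0 - 25.0 * r))


# Red (660 nm) signal pulsates by 1 % of its DC level, infrared (940 nm) by 2 %.
r = ratio_of_ratios(ac_red=0.01, dc_red=1.0, ac_ir=0.02, dc_ir=1.0)
print(round(spo2_estimate(r), 1))  # 97.5
```

A lower R (relatively less red absorption pulsing) corresponds to higher saturation, which is why the mapping has a negative slope.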

In contrast to patient monitoring in a clinic, this sensor system supports monitoring while standing, sitting and on the move, so that resuscitation and first aid can be effective in an emergency, including limb loss and acute bleeding. This is why information must be collected from more sensors at more locations on the body.

The most important goal of developing this subsystem within the ARHUDFM platform is to monitor the physical and psychological state of military personnel during intense physical training, field exercises and tactical missions. The primary task of the subsystem is to detect dangerous and critical conditions in time and report them to the BMS (Battlefield Management System) analytical center, to other nearby users and to the user himself. The second most important task is immediate "Care Under Fire" in critical conditions when medical assistance cannot be provided within the first minutes. The third most important task is to help rescue teams find and evacuate the casualty.

Continuous monitoring capabilities

A 12-lead ECG together with monitoring of body temperature, breathing and the drinking system makes it possible to:

- determine the degree of fatigue and dehydration

- assess stress levels and shock

- identify the limits of exercise intensity and duration that are safe for health

- identify random anomalies, arrhythmias, latent diseases or the initial stages of diseases

- diagnose critical medical symptoms in time

- respond instantly to blood loss and trigger one or more of the 8 limb auto tourniquets

- respond immediately to cardiac arrest[58] (more precisely, to ventricular fibrillation, 95% of cases, and to circulatory arrest with preserved bioelectrical activity, which causes hypoxia) and begin resuscitation at once, including defibrillator discharges of varying energy (a short high-voltage pulse that causes a complete contraction of the myocardium)

- start and control automatic pulmonary resuscitation with the help of 2 pneumatic pumps built into the design of the air filters

- start and control automatic emergency infusion of a solution (fluid resuscitation), carried on the back in an elastic vest, through a pre-implanted venous port system (for example, in the right subclavian vein; such a port can remain in place for several years and withstand up to 2000 punctures with a special needle)[59]

- instantly send an alert message for help within the unit, within the domain and between domains, including position and vital status; when it detects the approach of the evacuation group, it turns on the "SOS" light mode
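The alert described in the last item carries a fixed set of information: who needs help, to which audience (unit, domain, inter-domain), where they are and their vital status. The sketch below shows one possible payload as a Python dataclass serialised to JSON. The field names, the `Scope` values and the JSON encoding are hypothetical; the actual ARHUDFM message format is not specified here.

```python
import json
from dataclasses import asdict, dataclass, field
from enum import Enum
from typing import Dict


class Scope(Enum):
    """Hypothetical delivery scopes matching the text: unit, domain, between domains."""
    UNIT = "unit"
    DOMAIN = "domain"
    INTER_DOMAIN = "inter_domain"


@dataclass
class DistressAlert:
    """Hypothetical alert payload: who, to whom, where, and current vitals."""
    user_id: str
    scope: Scope
    lat: float
    lon: float
    vitals: Dict[str, float] = field(default_factory=dict)
    sos_light: bool = False

    def on_evacuation_group_detected(self) -> None:
        # Per the text, the "SOS" light mode is switched on when the evacuation group approaches.
        self.sos_light = True

    def to_json(self) -> str:
        payload = asdict(self)
        payload["scope"] = self.scope.value  # a plain Enum is not JSON-serialisable as-is
        return json.dumps(payload, sort_keys=True)


alert = DistressAlert("user-17", Scope.UNIT, 48.85, 2.35, {"hr_bpm": 128.0, "spo2_pct": 91.0})
alert.on_evacuation_group_detected()
print(alert.to_json())
```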

Optional extension of the monitoring system

Optionally, the monitoring system can be extended to automatically control:

- 8 automatic tourniquets on the shoulders, forearms, thighs and shins to stop bleeding under ECG control

- magnetic mixing valve for automatic fluid resuscitation in acute massive blood loss

- ventricular defibrillation under ECG control

- pulmonary resuscitation under the control of several sensors simultaneously

Sensor system and built-in defibrillator

The sensor system is connected via Bluetooth and includes the following installation locations (cuffs):

- 2 wrists (including a pulse check when an auto tourniquet higher up the arm is activated)

- 2 forearms (including auto tourniquet)

- 2 upper arms (including auto tourniquet)

- 2 ankles (including a pulse check when an auto tourniquet higher up the leg is activated)

- 2 shins (including auto tourniquet)

- 2 thighs (including auto tourniquet)

- a perforated elastic chest belt with one strap over the right shoulder, which contains the built-in reusable electrodes of a 12-lead Holter ECG monitor[60][61] and, at the same time, a built-in defibrillator (analogous to a WCD / ICD[62][63]); the chest electrodes are placed at:

- 4th intercostal space on the right side of the sternum

- 4th intercostal space on the left side of the sternum

- 5th rib along the left parasternal line

- 5th intercostal space along the left midclavicular line

- 5th intercostal space along the left anterior axillary line

- 5th intercostal space along the left mid-axillary line
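The cuff list above implies a simple layout: 12 limb cuffs, 8 of which carry auto tourniquets, while the 4 wrist and ankle cuffs serve as distal pulse-check points for the tourniquets above them. The sketch below encodes that layout as a dictionary and maps each tourniquet to the cuff that can confirm the absence of a pulse below it. The identifiers (`left_thigh`, `right_wrist`, and so on) are hypothetical names chosen for illustration.

```python
SIDES = ("left", "right")
# Segments ordered from the torso outward; True = cuff carries an auto tourniquet.
ARM = [("upper_arm", True), ("forearm", True), ("wrist", False)]
LEG = [("thigh", True), ("shin", True), ("ankle", False)]


def cuff_layout() -> dict:
    """Map every limb cuff (hypothetical id) to whether it has an auto tourniquet."""
    layout = {}
    for side in SIDES:
        for limb in (ARM, LEG):
            for segment, has_tourniquet in limb:
                layout[f"{side}_{segment}"] = has_tourniquet
    return layout


def distal_check_site(tourniquet_site: str) -> str:
    """Cuff that checks the pulse below a given tourniquet: wrist or ankle of the same limb."""
    side, segment = tourniquet_site.split("_", 1)
    if segment in ("upper_arm", "forearm"):
        return f"{side}_wrist"
    if segment in ("thigh", "shin"):
        return f"{side}_ankle"
    raise ValueError(f"{tourniquet_site} carries no tourniquet")


layout = cuff_layout()
print(len(layout), sum(layout.values()))  # 12 cuffs, 8 tourniquets
print(distal_check_site("left_thigh"))    # left_ankle
```

The counts agree with the "12 cuffs" and "8 automatic tourniquets" figures stated earlier on this page.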

Automatic stop of acute massive bleeding (auto tourniquets)

- the tourniquet is applied in case of massive bleeding according to the "high and tight" principle

- if the tourniquet is not applied in time, a person can die within 2-3 minutes

- after the tourniquet is applied, the casualty should be examined by a physician as soon as possible

- a tourniquet can be worn without loosening safely for up to 2 hours, and relatively safely for 2 to 6 hours

- the tourniquet must not be loosened: this is dangerous to health and life, because loosening it can lead to the resumption of critical bleeding

- a tourniquet stops bleeding by compressing the muscles

- critical bleeding is not classified as arterial or venous; the tourniquet is always applied above the wound

- places for installation of auto tourniquets: shoulder, forearm, thigh, shin - in each case in the upper part

- tourniquet pressure (mmHg) = limb circumference / tourniquet width × 16.67 + 67 (circumference and width in the same units)

- the tourniquet must be checked for loosening every 5-10 minutes by automatically measuring the pulse and limb temperature on both sides of the tourniquet

- reference designs: CAT, NAR and LEAF tourniquets[64]
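The pressure rule in the list above can be evaluated directly. The sketch assumes, as the rule is usually quoted, that the result is in mmHg and that limb circumference and tourniquet width are given in the same unit. It also reduces the periodic check to a single hypothetical signal: the tourniquet is considered to be holding while no pulse is detected distal to it. The temperature comparison from the text is not modelled.

```python
def tourniquet_pressure_mmhg(limb_circumference_cm: float, tourniquet_width_cm: float) -> float:
    """Pressure from the quoted rule: circumference / width x 16.67 + 67 (assumed mmHg)."""
    if limb_circumference_cm <= 0 or tourniquet_width_cm <= 0:
        raise ValueError("circumference and width must be positive")
    return limb_circumference_cm / tourniquet_width_cm * 16.67 + 67


def tourniquet_holding(distal_pulse_detected: bool) -> bool:
    """Simplified 5-10 min check: holding while no pulse is found below the tourniquet."""
    return not distal_pulse_detected


# Assumed example: 30 cm upper arm, 3.8 cm wide strap.
print(round(tourniquet_pressure_mmhg(30, 3.8)))  # 199
```

A wider strap lowers the required pressure for the same limb, which is the reason the width sits in the denominator.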

Automatic fluid resuscitation

Fluid resuscitation is performed using a magnetic mixing valve and a spring-loaded pocket that pressurizes the infusion bags. It is required in acute massive blood loss, for example more than 20% of circulating blood volume[65], as assessed by the TASH (Trauma Associated Severe Hemorrhage) score[66][67], and runs under BP and ECG control. The fluids are delivered through a port system pre-connected to the right subclavian vein, from infusion bags with a plasma substitute ("small volume resuscitation"), an anticoagulant and possibly other solutions. All volumes are small; the course of fluid resuscitation depends on the full set of vital signs and the individual reaction of the body.[68][69]
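The "more than 20% of circulating blood volume" example threshold can be turned into a quick calculation. The sketch estimates circulating blood volume as 70 ml per kg of body mass, a typical adult figure that is an assumption here and not part of the text, and compares an estimated loss against the 20% threshold. It does not implement the TASH score or the BP/ECG control described above.

```python
ML_PER_KG = 70.0  # assumed typical adult estimated blood volume, ml per kg


def blood_loss_fraction(loss_ml: float, body_mass_kg: float,
                        ml_per_kg: float = ML_PER_KG) -> float:
    """Estimated blood loss as a fraction of circulating blood volume."""
    if body_mass_kg <= 0:
        raise ValueError("body mass must be positive")
    return loss_ml / (body_mass_kg * ml_per_kg)


def fluid_resuscitation_indicated(loss_ml: float, body_mass_kg: float,
                                  threshold: float = 0.20) -> bool:
    """True when the estimated loss exceeds the 20 % example threshold."""
    return blood_loss_fraction(loss_ml, body_mass_kg) > threshold


# 80 kg user: about 5600 ml circulating volume, so 20 % is about 1120 ml.
print(fluid_resuscitation_indicated(1200, 80), fluid_resuscitation_indicated(1000, 80))
```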

Automatic external defibrillation (AED)

The VBS app instantly evaluates several parameters and, if necessary, automatically stops blood loss in the limbs and immediately starts other forms of "Care Under Fire": pulmonary and cardiac resuscitation.[70]

Because the time between cardiac arrest and defibrillation is directly related to survival, a therapeutic shock must be delivered within minutes of the event to be effective. With every minute without treatment, the patient's chances of survival are reduced by 7-10%.
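The 7-10% per minute figure can be made concrete with a simple linear model. The sketch assumes that the reduction is in percentage points from a 100% baseline, meaning survival relative to an immediate shock, and that it is floored at zero. Both the baseline and the linear form are simplifying assumptions, not clinical predictions.

```python
def remaining_survival_pct(minutes_without_shock: float, loss_per_minute_pct: float) -> float:
    """Linear model: start at 100 % and lose a fixed number of points per minute."""
    if minutes_without_shock < 0:
        raise ValueError("time cannot be negative")
    return max(0.0, 100.0 - loss_per_minute_pct * minutes_without_shock)


for rate in (7, 10):
    print(rate, [remaining_survival_pct(m, rate) for m in (1, 3, 5, 10)])
```

Under this model, a 5-minute delay already removes a third to a half of the chance of survival, which is why the system delivers the shock itself instead of waiting for a medic.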

Shockable rhythms (patient unconscious, circulation ineffective):

- ventricular fibrillation

- ventricular tachycardia without pulse

Rhythms treated with synchronized shocks (the patient is often conscious but hemodynamically unstable; the shock is ECG-controlled and synchronized with the R wave)[71]:

- atrial fibrillation

- atrial flutter

- atrioventricular nodal reentrant tachycardia (AVNRT)

- ventricular tachycardia with pulse (patient is awake, stable blood pressure)
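The two rhythm lists above amount to a lookup from a detected rhythm to a shock mode. The sketch assumes that rhythm classification from the ECG happens elsewhere and yields one of the hypothetical string labels used here. Any rhythm not in either list receives no shock.

```python
from enum import Enum


class ShockMode(Enum):
    DEFIBRILLATION = "unsynchronized defibrillation"
    SYNCHRONIZED = "shock synchronized with the R wave"
    NONE = "no shock"


# Hypothetical labels produced by an upstream ECG rhythm classifier.
SHOCKABLE_RHYTHMS = {"ventricular_fibrillation", "pulseless_ventricular_tachycardia"}
SYNCHRONIZED_RHYTHMS = {"atrial_fibrillation", "atrial_flutter", "avnrt",
                        "ventricular_tachycardia_with_pulse"}


def shock_mode(rhythm: str) -> ShockMode:
    """Map a classified rhythm to the shock mode given in the lists above."""
    if rhythm in SHOCKABLE_RHYTHMS:
        return ShockMode.DEFIBRILLATION
    if rhythm in SYNCHRONIZED_RHYTHMS:
        return ShockMode.SYNCHRONIZED
    return ShockMode.NONE


print(shock_mode("ventricular_fibrillation").value)
print(shock_mode("atrial_flutter").value)
```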

The user is alerted to the start of a treatment sequence, for example by beeper signals and voice messages. While conscious, the user can prevent unnecessary shocks by pressing the two response buttons on the monitor simultaneously. If the user does not respond, for example because he has lost consciousness due to an arrhythmia or ventricular fibrillation leading to circulatory arrest, gel is automatically released from under the defibrillator electrodes.[62][72]
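The alert-and-response logic in the previous paragraph can be sketched as a small sequence. The user is alerted, and if both response buttons are pressed within a response window, the shock is withheld; otherwise the gel is released. The 30-second window and all names are hypothetical, since the text does not give a timeout.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TreatmentSequence:
    """Hypothetical sketch of the alert / response-button / gel-release sequence."""
    response_window_s: float = 30.0  # assumed value; not stated in the text
    log: List[str] = field(default_factory=list)

    def run(self, both_buttons_pressed_at_s: Optional[float]) -> bool:
        """Return True if the sequence continues towards a shock.

        both_buttons_pressed_at_s: seconds after the alert at which the user
        pressed BOTH response buttons, or None if they never did.
        """
        self.log.append("alert: beeper signals + voice message")
        if both_buttons_pressed_at_s is not None and both_buttons_pressed_at_s <= self.response_window_s:
            self.log.append("user responded: shock withheld")
            return False
        self.log.append("no response: release gel under the defibrillator electrodes")
        return True


seq = TreatmentSequence()
print(seq.run(None), seq.log)
```

Requiring both buttons together, as the text describes, makes an accidental single press insufficient to cancel treatment.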

Main causes of heart failure

The main causes of pulmonary and heart failure, acute tachycardia and life-threatening conditions[73][74]:

- contusion of internal organs

- blunt trauma and concussion of the heart and lungs

- acute bleeding of more than 150 ml/min (with blood loss over 1500 ml / 30%: systolic blood pressure below 90 mmHg, heart rate over 110 beats per minute, respiratory rate 20-30 breaths per minute, agitation)

- state of shock
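The thresholds given for major blood loss (over 1500 ml / 30%) can be checked against a monitored vitals snapshot. The sketch assumes that all three measured criteria must hold at once: systolic pressure below 90 mmHg, heart rate above 110 bpm, and respiratory rate within 20-30 breaths per minute. Combining them with a logical AND is an illustrative choice, not a validated clinical rule, and the 150 ml/min bleeding rate itself is not directly measurable by these sensors.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    systolic_mmhg: float
    heart_rate_bpm: float
    resp_rate_bpm: float


def matches_major_blood_loss_pattern(v: Vitals) -> bool:
    """True when SBP < 90 mmHg, HR > 110 bpm and RR is within 20-30 breaths/min."""
    return (v.systolic_mmhg < 90
            and v.heart_rate_bpm > 110
            and 20 <= v.resp_rate_bpm <= 30)


print(matches_major_blood_loss_pattern(Vitals(85, 120, 24)))
```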

Pulmonary resuscitation

Pulmonary resuscitation[75] (artificial ventilation of the lungs without intubation; CPAP, continuous positive airway pressure) uses a small tidal volume (6-7 ml per kg of body weight) with a moderate positive end-expiratory pressure (up to 5 mbar). It is required especially in patients with traumatic bleeding because of the risk of developing respiratory distress syndrome.
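The ventilation settings in the paragraph above scale with body weight. The sketch computes the 6-7 ml/kg tidal volume range and caps the requested end-expiratory pressure at 5 mbar. Lung-protective ventilation protocols usually base the volume on predicted rather than actual body weight; the simpler actual-weight version below follows the text, and the function names are hypothetical.

```python
from typing import Tuple

PEEP_MAX_MBAR = 5.0  # "up to 5 mbar" from the text


def tidal_volume_range_ml(body_mass_kg: float, low_ml_per_kg: float = 6.0,
                          high_ml_per_kg: float = 7.0) -> Tuple[float, float]:
    """Tidal volume range (ml) for the given body weight at 6-7 ml/kg."""
    if body_mass_kg <= 0:
        raise ValueError("body mass must be positive")
    return body_mass_kg * low_ml_per_kg, body_mass_kg * high_ml_per_kg


def limited_peep_mbar(requested_mbar: float) -> float:
    """Clamp a requested PEEP to the 0..5 mbar range."""
    return max(0.0, min(requested_mbar, PEEP_MAX_MBAR))


print(tidal_volume_range_ml(80))  # (480.0, 560.0)
```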

How this system is implemented:

- the lower part of the mask contains 2 housings for HEPA H14 or ULPA U15 air filters; thanks to the large area of filter material, they create a resistance to airflow that a person does not notice even with large lung volumes and rapid breathing (for example, during intense exercise)

- the pre-filter zone also contains an activated carbon fill and the pre-filter itself

- together, this filtration system protects the user with 99.9995% efficiency against dust, powders, many harmful gases and aerosols, bacteria and viruses, and optionally against carbon monoxide (CO)

- centrifugal fans driven by high-torque permanent magnet synchronous motors (PMSM), similar to those used in quadcopter rotors and in medical and lab equipment, can optionally be installed in the inner cylinders of the filter housings; this turns the design into 2 fairly powerful, flexibly controlled ventilation devices (up to 30 l/min, up to 105 kPa, breath duration 1.2-4.4 s, with the two air pumps operating alternately)[76]

- in a critical situation such as respiratory arrest, instead of a manual resuscitation bag that must be squeezed by another person, the system automatically starts the electric motors, which, under the control of the ECG, pulse oximeter and other sensors, provide the unconscious user with artificial lung ventilation for an extended period

- the inlet check valves of the filter system and the double seal (mask airway obturator and face seal) allow such a system to work efficiently

- when the system is activated, the exhaust check valve is held by a magnetic lock so that the overpressure created in the mask airway obturator is not lost during inspiration

- we are, of course, interested in the opinion of medical experts in resuscitation, but technically we see no obstacles to implementing such a system; the history of forced lung ventilation and survival statistics support this
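The ventilation mechanism in the list above can be sketched as a breath-by-breath cycle. During inspiration, one of the two filter-housing pumps runs while the exhaust valve is magnetically locked. During expiration, the pumps idle and the exhaust valve opens. The sketch makes several assumptions. The pumps alternate on every breath, the quoted 1.2-4.4 s "breath duration" is read as inspiratory time, and exhalation is passive. The cycle also checks that the tidal volume can be delivered within that time at the quoted 30 l/min limit.

```python
from typing import List, Tuple

PUMP_MAX_FLOW_L_PER_MIN = 30.0    # "up to 30 l/min" from the text
INSPIRATION_RANGE_S = (1.2, 4.4)  # quoted breath duration, read as inspiratory time (assumption)


def required_flow_l_per_min(tidal_volume_ml: float, inspiration_s: float) -> float:
    """Average flow needed to deliver the tidal volume within the inspiratory time."""
    return tidal_volume_ml / 1000.0 / inspiration_s * 60.0


def ventilation_cycles(n_breaths: int, tidal_volume_ml: float,
                       inspiration_s: float) -> List[Tuple[str, str, str]]:
    """Return (phase, active pump, exhaust valve state) steps for n breaths."""
    low, high = INSPIRATION_RANGE_S
    if not low <= inspiration_s <= high:
        raise ValueError("inspiratory time outside the quoted 1.2-4.4 s range")
    if required_flow_l_per_min(tidal_volume_ml, inspiration_s) > PUMP_MAX_FLOW_L_PER_MIN:
        raise ValueError("tidal volume cannot be delivered at 30 l/min in this time")
    steps = []
    for i in range(n_breaths):
        pump = "pump_A" if i % 2 == 0 else "pump_B"  # assumed: pumps alternate every breath
        # Magnetic lock on the exhaust check valve holds the overpressure during inspiration.
        steps.append(("inspiration", pump, "exhaust_locked"))
        # Assumed passive exhalation: pumps idle, exhaust valve released.
        steps.append(("expiration", "none", "exhaust_open"))
    return steps


for step in ventilation_cycles(2, 560, 2.0):
    print(step)
```

For example, a 560 ml breath delivered in 1.2 s needs about 28 l/min, just within the quoted pump capacity. A 700 ml breath in the same time would need 35 l/min and is rejected.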

Perforated elastic chest belt

Includes pockets to accommodate (optional):

- front 6 electrodes with automatic ejection of electrically conductive gel (or reusable adhesive gel electrodes)

- 2 rear and 1 front automatic defibrillator electrodes (also with manual triggering by an assisting person)

- connecting cables

- 2 in the back to accommodate additional power supplies (4 × 12, max. 48 LiMgCoAl 26650 3.7 V 5500 mAh cells, ~976.8 Wh, 9.9 lbs / 4.5 kg)

- 1 infusion bag with a plasma substitute, 500-750 ml (at 2.14 ml/kg/h[77], this lasts 3-4 hours until arrival at the hospital)

- 1 or 2 infusion bags with anticoagulant and possibly also with other solutions, less than 100 ml

- connection system for automatic infusion therapy, connected to a port system previously installed in the right subclavian vein (under the skin)

- 1 drinking water bag for the built-in drinking system, 3-4 lbs
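The "3-4 hours" figure for the plasma substitute bag follows from dividing the bag volume by the weight-based infusion rate. The sketch assumes an 80 kg user, a hypothetical value since the text gives no body mass. At 2.14 ml/kg/h this gives about 171 ml/h, so a 500-750 ml bag lasts roughly 2.9-4.4 hours, consistent with the stated range.

```python
def infusion_hours(volume_ml: float, rate_ml_per_kg_h: float, body_mass_kg: float) -> float:
    """Hours a bag lasts at a weight-based infusion rate."""
    if rate_ml_per_kg_h <= 0 or body_mass_kg <= 0:
        raise ValueError("rate and body mass must be positive")
    return volume_ml / (rate_ml_per_kg_h * body_mass_kg)


BODY_MASS_KG = 80.0  # assumed user mass for illustration
for volume in (500, 750):
    print(volume, round(infusion_hours(volume, 2.14, BODY_MASS_KG), 1))
```

A heavier user shortens these times proportionally, since the rate is specified per kilogram.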

Total weight without additional power sources and drinking water - less than 3 lbs

The use of the ARHUDFM platform reduces the overall weight of the gear by approximately 2 lbs.

Facts for comparison:

- we expect to at least halve the mass of the additional power supplies (9.9 lbs, ~1 kWh) within 3-4 years as battery technology advances

- technologies for cardiac monitoring, sensor-based health monitoring and resuscitation on the move have been in use for 10-20 years or more; with modern components and technologies, we are able to make them much more compact and lighter, giving the military an additional chance to survive in a potentially extreme situation

- the projected price of ARHUDFM is lower than the cost of one M1128 155 mm artillery shell (~$4000)

- a full kit of gear is 47% cheaper and 2 pounds lighter, but the difference in capabilities is huge
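The power-supply figures can be checked with a short calculation. Multiplying the listed cell rating (48 cells, 3.7 V nominal, 5500 mAh) gives the nominal pack energy, and dividing by the 9.9 lb pack mass gives its specific energy. Keeping the same energy at half the mass, as anticipated above, would require roughly double that specific energy. The constants come from the text; nominal values ignore usable-capacity derating and the mass of wiring and housing.

```python
LB_TO_KG = 0.45359237  # exact definition of the avoirdupois pound

CELLS = 4 * 12          # 48 x 26650 cells
CELL_VOLTAGE_V = 3.7    # nominal cell voltage
CELL_CAPACITY_AH = 5.5  # 5500 mAh
PACK_MASS_LB = 9.9


def pack_energy_wh(cells: int, voltage_v: float, capacity_ah: float) -> float:
    """Nominal pack energy: number of cells x nominal voltage x capacity."""
    return cells * voltage_v * capacity_ah


energy = pack_energy_wh(CELLS, CELL_VOLTAGE_V, CELL_CAPACITY_AH)
mass_kg = PACK_MASS_LB * LB_TO_KG
specific = energy / mass_kg                  # Wh/kg at today's pack mass
specific_if_halved = energy / (mass_kg / 2)  # Wh/kg needed for the same energy at half the mass

print(f"{energy:.1f} Wh, {mass_kg:.2f} kg, {specific:.0f} Wh/kg -> {specific_if_halved:.0f} Wh/kg")
```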

Conclusion

We consider this a serious problem: with several casualties suffering acute massive blood loss, the medic simply will not have enough plasma substitute to keep them alive until evacuation and delivery to the hospital (2-4 hours on average). The same applies to how quickly the medic can start "Care Under Fire" and to his ability to treat multiple casualties at the same time.